28.1: Decision Trees in Python

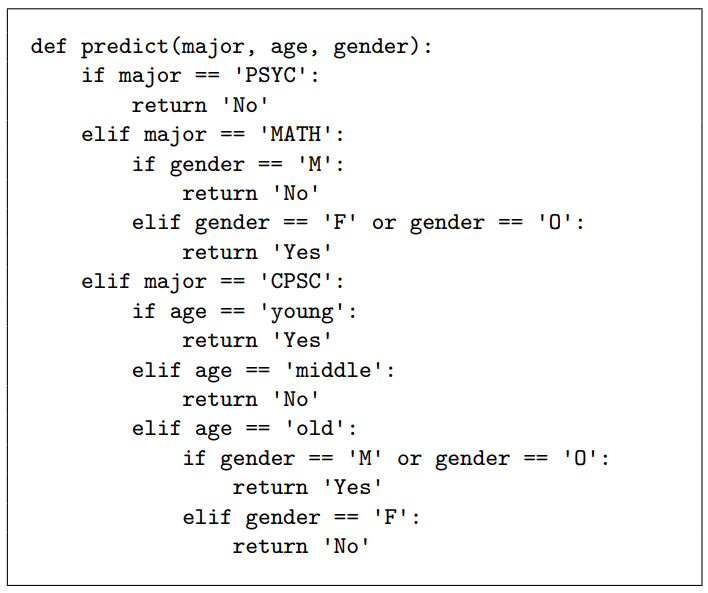

Our decision tree pictures from chapter 27 were quite illustrative, but of course to actually automate something, we have to write code rather than draw pictures. What would Figure 27.1 look like in Python code? It’s actually pretty simple, although there’s a lot of nested indentation. See if you can follow the flow in Figure 28.1.1.

Here we’re defining a function called predict() that takes three arguments, one for each feature value. The eye-popping set of if/elif/else statements looks daunting at first, but when you scrutinize it you’ll realize it perfectly reflects the structure of the purple diagram. Each time we go down one level of the tree, we indent one tab to the right. The body of the “if major == 'PSYC':” statement is very short because the left-most branch of the tree (for Psychology) is very simple. The “elif major == 'CPSC':” body, by contrast, has lots of nested internal structure precisely because the right-most branch of the tree (for Computer Science) is complex. Etc.

Figure 28.1.1: A Python implementation of the decision tree in Figure 27.2.1

If we call this function, it will give us exactly the same predictions we calculated by hand in chapter 27:

Code 28.1.1 (Python):

```python
print(predict('PSYC', 'M', 'old'))
print(predict('MATH', 'O', 'young'))
print(predict('CPSC', 'F', 'old'))
```

Output:

```
No
Yes
No
```
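In this copy, Figure 28.1.1 exists only as an image. The sketch below writes the same nested if/elif pattern out as runnable text. It is a stand-in built on assumptions, not the book's code. The tree is hypothetical: its splits were invented for illustration and chosen only so that the three calls above print the same No, Yes, No. What carries over from the figure is the shape. There is one indentation level per tree level, a very short body for the simple PSYC branch, and a deeply nested body for the more complex CPSC branch.

```python
def predict(major, gender, age):
    """Classify one person with a small HYPOTHETICAL decision tree.

    The splits below are invented for illustration; they are not a
    transcription of Figure 28.1.1.
    """
    if major == 'PSYC':
        # Simple branch: this whole subtree is a single leaf.
        return 'No'
    elif major == 'MATH':
        # One level further down: split on age.
        if age == 'young':
            return 'Yes'
        else:
            return 'No'
    elif major == 'CPSC':
        # Complex branch: split on gender, then (for 'F') on age,
        # so the code is indented two more levels.
        if gender == 'M':
            return 'Yes'
        elif gender == 'F':
            if age == 'young':
                return 'Yes'
            else:
                return 'No'
        else:
            return 'Yes'
    else:
        # Assumed fallback for a major the tree has no branch for.
        return 'No'


print(predict('PSYC', 'M', 'old'))
print(predict('MATH', 'O', 'young'))
print(predict('CPSC', 'F', 'old'))
```

The final `else` is also an assumption of this sketch. It returns `'No'` for any major the tree has no branch for. Without some such fallback, `predict()` would return `None` for an unseen major, because a Python function that reaches its end without a `return` statement returns `None`.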