Chapter 1: Importance of Statistics and Experimental Measurements

Pre-recorded lecture videos that cover this topic are available here: https://youtube.com/playlist?list=PL...EANc_k_3z5Y5n8

1.1 Performing Error and Data Analysis in the Lab:

Here are some common questions that I typically overhear when students are in lab talking about data analysis:

- How good is your data?

- How well does your experimental data fit the theory?

- What is the \(R^2\) value?

- What is the percent error?

While well intentioned, these are not the questions an experimentalist should ask. Before any data set can be used for an application, the quality of the data must first be established.

For example, in the first question, is the student assessing accuracy? Precision? How well the data fit the theory? We must be specific and careful with our language. As for the second question, data are not necessarily good just because they agree with theory. The quality of the data must be assessed before any conclusions can be drawn. If theory and experiment disagree, what is the reason? Is that theory applicable in this situation? We must ask these questions!

You all probably have experience with \(R^2\) as a measure of the goodness of a fit, but in this course we will not stop there: we will perform a much more thorough error analysis.

Finally, never, ever include percent error in any lab analysis that we perform in this class. It is a meaningless error analysis: percent error requires the actual or true value of a measurement, which we never know, so we will not perform it.

When designing an experiment it is crucial that you do the following:

• Clearly define the goals and, ideally, the graphs/figures for the study

• Establish the experimental plan, apparatus, and DAQ for collecting data

• Perform appropriate statistical analysis to effectively extract critical information from raw data

• Interpret results and draw conclusions that are statistically significant

Additionally, when we speak of quality, we mean how close the measurement comes to the actual or true value of the physical quantity being measured. Often the measured value differs from the true value. The difference between the measured value and the actual or true value is the error.

Here we encounter a problem: we cannot calculate the error exactly unless we know the true value of the quantity being measured. Instead we estimate bounds on the error, or the likelihood that the error exceeds a specific value. For example, suppose that 95% (19 out of 20) of the yield strength measurements have an error of less than 1 MPa. Then a reading has an uncertainty of 1 MPa at a confidence level of 95%. We will calculate confidence levels or confidence intervals when quantifying error.

As experimentalists we often work with large data sets with a large number of measurements. The error or uncertainty can be estimated with statistical tools when a large number of measurements are taken. Later on in the class we will learn how to use some software tools like Matlab, R, Python, and/or Mathematica to perform error analysis. Much more on this later, but let’s get to some basics of experimentation and measurements.
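As a rough illustration of what "an uncertainty at a 95% confidence level" means, the sketch below simulates a set of yield strength readings and estimates the bound that about 95% of them fall within. The data, the "true" value, and the amount of scatter are all made up for this example, and the calculation assumes the scatter is Gaussian and the sample is large. The bound is measured from the sample mean, because in a real experiment the true value is not available.

```python
import random
import statistics

# Hypothetical data: 200 simulated yield-strength readings (MPa).
# The center value and the scatter are assumptions invented for this sketch.
random.seed(0)
SIMULATED_CENTER_MPA = 250.0
readings = [random.gauss(SIMULATED_CENTER_MPA, 0.5) for _ in range(200)]

mean = statistics.mean(readings)
std = statistics.stdev(readings)  # sample standard deviation

# Two-sided 95% coverage factor for a Gaussian distribution (about 1.96).
z95 = statistics.NormalDist().inv_cdf(0.975)

# Bound that roughly 95% of individual readings fall within, relative to the mean.
uncertainty_95 = z95 * std

# Fraction of the simulated readings that actually fall inside that bound.
inside = sum(abs(r - mean) <= uncertainty_95 for r in readings) / len(readings)

print(f"mean = {mean:.2f} MPa, uncertainty (95%) = {uncertainty_95:.2f} MPa")
print(f"fraction of readings inside the bound: {inside:.2f}")
```

For small samples a Student's t coverage factor would normally replace the Gaussian one used here.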

1.2 Measurements:

A measurement is a quantitative comparison between a predefined standard and a measurand.

What are some examples of different types of measurements we can make?

• Temperature

• Length

• Time

• Stress

• Strain

• Viscosity

• Force

• Torque

• ....

Measurand: the particular physical parameter being observed and quantified, i.e., the input quantity of the measuring process

Standard: has a defined relationship to a unit of measurement for a given measurand. Typically a standard will be recognized by NIST (National Institute of Standards and Technology)

What are some examples of standards?

• Tape Measure

• Balance

• Speed of Light

• Stopwatch

• Extensometer

• ...

2.1.1 What is the significance of measurements, why do we experimentally measure parameters?

• Obtain quantitative information on the actual state of physical variables and processes (e.g. T, P, V, etc.); this is especially critical for thermodynamics and finding thermodynamic equilibrium

• Vital for control processes, which require the measured discrepancy between actual and desired output (e.g. temperature control on a sheet annealing line)

• Daily operations (e.g. temperature controls, heating and cooling, tire gauge, oil gauge, etc.)

• Establishing costs: with Ti at $140/lb, precision is important when dealing with tons of Ti.

• Temperature in bioreactor can influence cell growth

• Thermal conductivity, acceleration, material properties

2.2 Uncertainty in Measurements: Measurements Are Useless without Uncertainty Bounds or Error Bars

Measurements are only useful to us as engineers if we can quantify their uncertainty. No measurement is meaningful or useful if it does not have an associated error bar. In this class, and moving forward in your career as an engineer, be sure to place error bars on all your reported values. Error bars are critical because no measurement is perfect. If you measure the length of a tensile specimen with a micrometer, your measurement is likely to be different from that of your colleague. Thus, there will be some uncertainty in any measurement that one makes. In this section of the course we will develop a comprehensive framework for evaluating uncertainty and placing tolerances and confidence intervals on measured values. Much more on this later....

2.3 Using Correct Terminology When Assessing Measurement Quality/Apparatus Performance

• Accuracy: Difference between measured and true values. Manufacturers typically specify a maximum error but not confidence intervals.

• Precision: Difference between reported values during repeated measurements of same quantity.

• Resolution: Smallest increment of change in the measured value that can be determined by the readout scale. Often the same as precision.

• Sensitivity: The change of an instrument’s output per unit change in the measured quantity. Higher sensitivity often indicates finer resolution, better precision, and higher accuracy.

2.4 Error and Uncertainty:

Error is the difference between the measured and true value of the quantity being measured. Unfortunately, the true value can never really be known, so instead we quantify the uncertainty of a measurement at specific levels of confidence. Error falls into two basic categories: bias error/uncertainty and precision error/uncertainty. We will cover uncertainty and error analysis much more in depth in the next several lectures...but never use percent error in this course.

Error

We can begin our discussion of error by defining it with the following equation:

\begin{equation}
\text{Error} = x_{m} - x_{true}
\end{equation}

where \(x_m\) is the measured value and \(x_{true}\) is the true value.

When designing an experimental system or executing an experiment, the experimentalist's primary objective should be to minimize the error. After the experiment is completed we need to estimate a bound on the error with a degree of certainty, or a confidence interval (e.g. 90%, 95%, 99%). Hopefully you have encountered confidence intervals before, but if not, no worries: we will discuss this in depth.

There are many different sources of inaccuracy or error; however, most errors can be classified as:

(1) Precision

(2) Bias

(3) Illegitimate

(4) Errors that are sometimes bias or sometimes precision errors

In this course we will spend a considerable amount of time discussing 1. Precision and 2. Bias error in great detail. But we will discuss all these sources of error and how to account for them when designing experiments or presenting your results. Let’s start with Precision Error.

2.4.1 Precision or Random Errors

I really do not like the term random error. You will occasionally see that term in the literature, but in this course we will primarily use the term precision error.

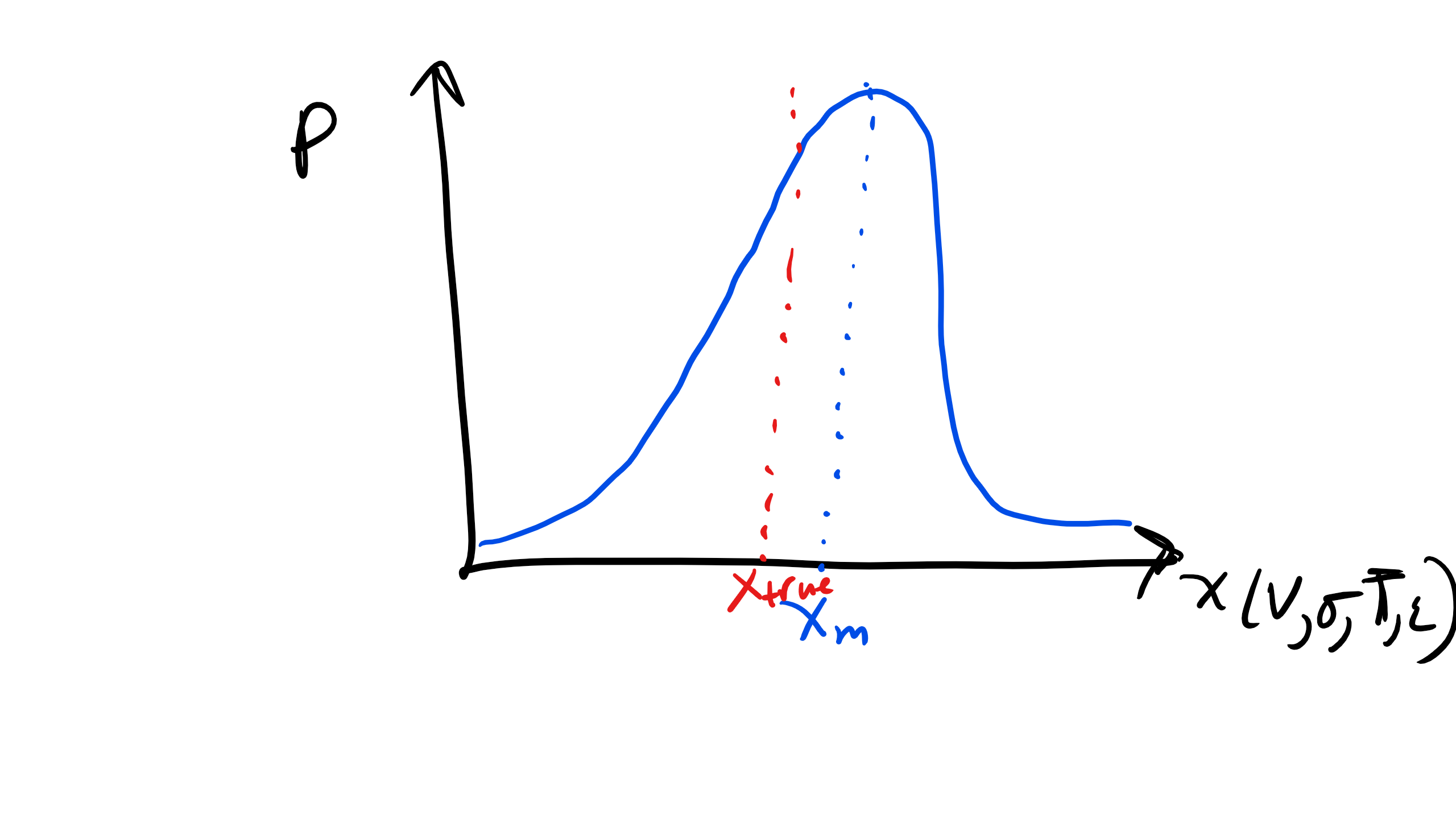

Precision errors are different for each successive measurement. Thus if you create a histogram, which shows how often each measured value occurs, the width of the distribution (hopefully it is Gaussian) will be due to precision error if that is the only source of error in your experiment. From this distribution, statistical analysis can be used to estimate the size of the precision error. Typically bias and precision errors occur simultaneously and both contribute to the total error. More on this fun calculation later; hope you remember Taylor expansions....
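To make the histogram idea concrete, here is a minimal sketch that simulates repeated readings with Gaussian scatter, bins them into a text histogram, and estimates the width of the distribution from the sample standard deviation. The measurand (a 25.00 mm length), the 0.02 mm scatter, and the bin width are hypothetical values chosen only for illustration.

```python
import random
import statistics
from collections import Counter

random.seed(1)
# Hypothetical measurand: a 25.00 mm length read with Gaussian scatter of 0.02 mm.
readings = [random.gauss(25.00, 0.02) for _ in range(500)]

BIN_WIDTH = 0.01  # mm
# Assign each reading to the nearest bin index, then count readings per bin.
counts = Counter(round(r / BIN_WIDTH) for r in readings)

# Print a simple text histogram: one '#' per 5 readings in the bin.
for bin_index in sorted(counts):
    center = bin_index * BIN_WIDTH
    bar = "#" * (counts[bin_index] // 5)
    print(f"{center:6.2f} mm | {bar}")

# The width of the distribution estimates the size of the precision error.
spread = statistics.stdev(readings)
print(f"estimated precision scatter (sample std dev): {spread:.3f} mm")
```

In a real experiment the readings would come from the instrument rather than from a random number generator, and the shape of the histogram should be checked before assuming it is Gaussian.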

Why Do We Have Precision Error?

(1) Disturbances to the equipment

(2) Fluctuating experimental conditions

(3) Insufficient measuring-system sensitivity

(4) Stochastic (Random) nature of measurements even in static conditions

Examples of Precision Error:

• Measuring temperature with thermocouple as temperature fluctuates

• Measuring the length of a beam with a micrometer: you will get slightly different measurements than your classmates

• Changes in temperature conditions can affect swimming of bacteria

Figure \(\PageIndex{1}\): Precision Error Distribution.

2.4.2 Bias or Systematic Errors

I like the terms bias and systematic much better than random. However, in this course we will primarily use the term bias error.

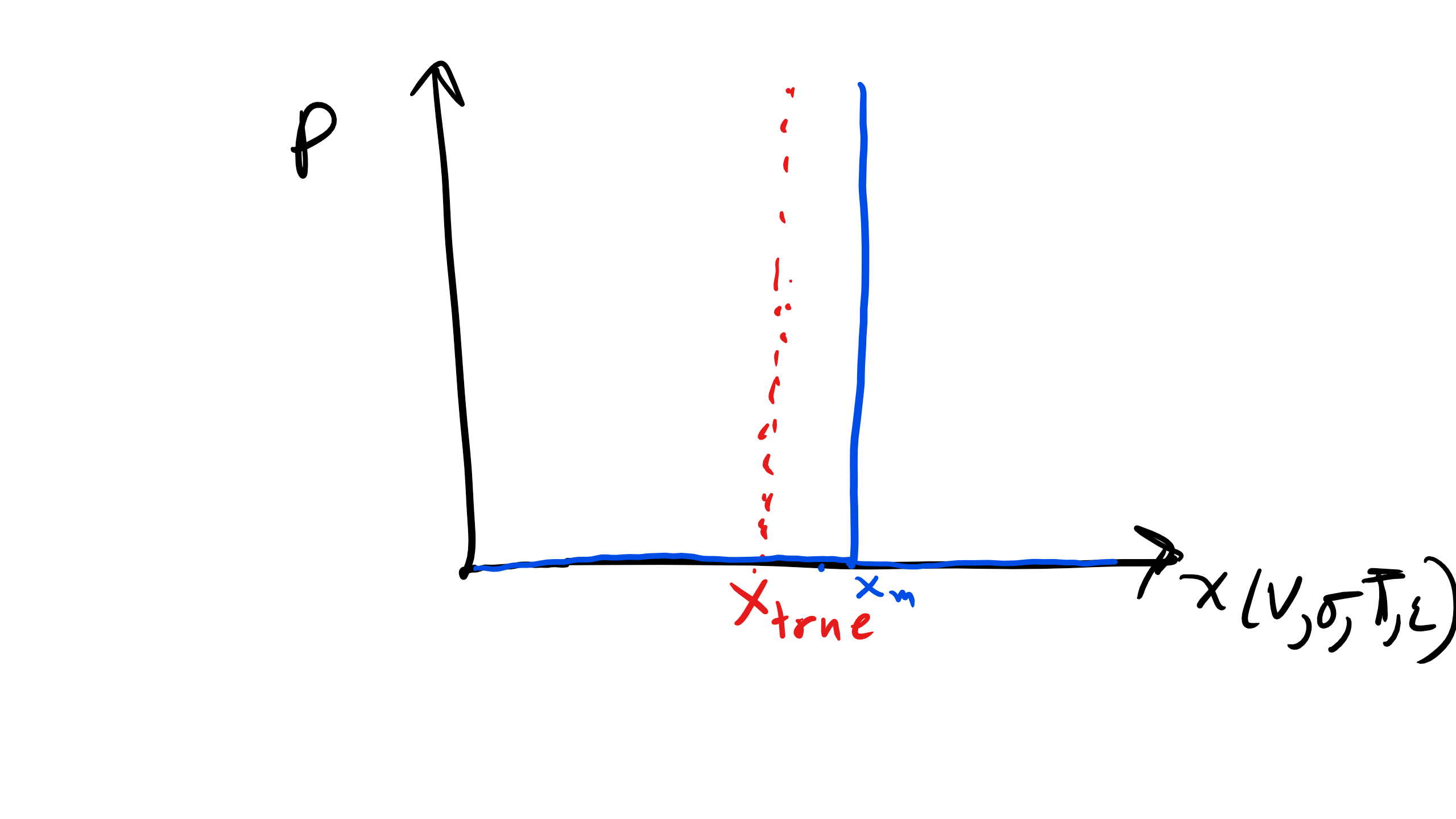

Bias errors occur the same way each time a measurement is made, for example a balance that consistently reads 0.5 mg heavy. In some literature you might see this termed a fixed offset error. The key thing to note about bias errors is that they are fixed and thus do not show a distribution. This is in stark contrast to precision errors, which show a distribution, typically Gaussian. Because there is no distribution, we cannot estimate a bias error by statistical analysis of repeated measurements.
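The contrast with precision error can be seen in a small simulation. The sketch below models a balance that reads 0.5 mg heavy and also has some random scatter; the true mass and the scatter are hypothetical, and the true mass is known here only because the data are simulated. Averaging more readings shrinks the scatter of the mean but leaves the 0.5 mg offset untouched.

```python
import random
import statistics

random.seed(2)
TRUE_MASS_MG = 100.0  # hypothetical true mass, known only because this is a simulation
BIAS_MG = 0.5         # the balance reads 0.5 mg heavy every time
SCATTER_MG = 0.2      # hypothetical precision scatter (standard deviation)


def read_balance():
    """Return one simulated reading: true value + fixed bias + random scatter."""
    return TRUE_MASS_MG + BIAS_MG + random.gauss(0.0, SCATTER_MG)


# The mean error settles toward the bias, not toward zero, as n grows.
for n in (5, 50, 5000):
    mean_reading = statistics.mean(read_balance() for _ in range(n))
    print(f"n = {n:5d}: mean error = {mean_reading - TRUE_MASS_MG:+.3f} mg")

large_sample_error = statistics.mean(read_balance() for _ in range(5000)) - TRUE_MASS_MG
```

This is why a bias error has to be found by other means, such as comparison against a known standard, rather than by taking more readings.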

There are multiple types of bias errors:

(1) Calibration errors

• This is the most common error

• Typically calibration errors are either zero-offset errors, which cause all readings to be offset by a constant amount, or scale errors

• E.g., a scale that consistently reads light by 1 lb or a clock that runs fast by 1 minute

• Can be reduced via calibration procedures and proving the system via comparison with known standard

(2) Consistently recurring human errors

• E.g., an experimenter who consistently jumps the gun when recording synchronized readings (time, voltage, etc.)

(3) Errors caused by defective equipment

(4) Loading errors

• E.g., not placing a DSC pan at the center of the DSC will skew temperature measurements

(5) System resolution limits

• E.g., the bias error for a ruler with cm tick marks will be on the order of a cm, compared to a micrometer with 1 µm resolution; that is a difference of 4 orders of magnitude.

3. Illegitimate Error

Illegitimate error can, and most likely will, happen to you at some point as you perform experiments as an engineer. Illegitimate errors include:

• Blunders and mistakes

• Computational or calculation errors

• Analysis errors

Figure \(\PageIndex{2}\): Bias Error Distribution

Although these things can occur in lab, when they do you must always perform the experiment again. You cannot simply present your results, whether in a presentation or in a piece of technical writing, and attribute the error to illegitimate error. You must perform the experiment again.

4. Errors That Are Sometimes Bias and Sometimes Precision Error:

These are the most difficult sources of error to analyze, because it is hard to determine what type of error analysis must be done and which errors have a distribution and which do not.

• Instrument Hysteresis

– Hysteresis error can be seen in stress strain measurements and is path dependent

• Calibration drift and variation in test or environmental conditions

– Drift occurs when the response varies over time often due to sensitivity to temperature or humidity

• Variations in procedure or definition among experimenters

Quantifying Total Uncertainty, Comprehensive Error Analysis

Again, we are typically concerned with bias and precision error when evaluating experimental data. This data comes from two classes of experiments: i) single-sample and ii) repeated-sample experiments.

A sample refers to an individual measurement of a specific quantity. For example, measuring strain in a material several times under identical loading is a repeated-sample experiment. A single measurement of strain is a single-sample experiment, and we cannot extract the distribution of precision error in this case. In this course and in your career as an engineer you will most likely be taking multiple measurements, i.e. repeated-sample experiments. Single-sample experiments are less common and typically occur when the measurement you are making is extremely difficult and multiple experiments are unrealistic to conduct, usually for economic or practical reasons.

The total uncertainty \(U_x\) in a measurement of x is

\begin{equation}
U_{x} = \sqrt{B_{x}^2 + P_{x}^2}
\end{equation}

where \(B_x\) is the bias uncertainty in a measurement of x and \(P_x\) is the precision uncertainty. Note that x is just a variable and we can talk about uncertainty in measuring volume (V), pressure (P), stress (σ), etc.

This is a largely empirically derived formula; however, it does depend on the assumption that the bias and precision uncertainty are independent sources of error. Also, the bias and precision uncertainty should have the same confidence level when calculating the total uncertainty. We will come back to this calculation a bit later in excruciating detail, so please be patient.
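The total uncertainty formula is a root-sum-square, which can be written as a short function. The function name and the numbers below are hypothetical and chosen only so the arithmetic is easy to check by hand; both inputs are assumed to be in the same units and at the same confidence level.

```python
import math


def total_uncertainty(bias, precision):
    """Combine bias and precision uncertainties by root-sum-square.

    Both inputs must be in the same units and at the same confidence level.
    """
    return math.hypot(bias, precision)


# Hypothetical values for a stress measurement, both at 95% confidence.
B_sigma = 3.0  # MPa, bias uncertainty
P_sigma = 4.0  # MPa, precision uncertainty
U_sigma = total_uncertainty(B_sigma, P_sigma)
print(f"U = {U_sigma:.1f} MPa")  # prints "U = 5.0 MPa"
```

Note that the total is dominated by the larger of the two terms, so reducing the smaller one has little effect on \(U_x\).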

Minimizing Error in Designing Experiments

The best time to minimize experimental error is in the design stage. You should perform a single-sample uncertainty analysis of the proposed experimental arrangement prior to building the apparatus. This allows you to decide whether the expected uncertainty is acceptable and to identify the sources of uncertainty. So when designing an experiment:

• Avoid approaches that require two large numbers to be measured to determine the small difference between them

• Design experiments or sensors that amplify the signal strength to improve sensitivity

• Build null designs in which the output is measured as a change from zero rather than as a change in nonzero value

• Avoid experiments in which large correction factors must be applied as part of the data-reduction procedure

• Attempt to minimize the influence of the measuring system on the measured variable

• Calibrate the entire system to minimize calibration-related bias errors.
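The first guideline in the list above, avoiding a small difference between two large measured numbers, can be illustrated with a quick calculation. The sketch assumes the two measurements have independent uncertainties that combine by root-sum-square, the same form as the total uncertainty formula; the weighing scenario and all numbers are hypothetical.

```python
import math


def difference_with_uncertainty(a, u_a, b, u_b):
    """Return (a - b, uncertainty of the difference).

    Assumes u_a and u_b are independent and combine by root-sum-square.
    """
    return a - b, math.hypot(u_a, u_b)


# Hypothetical example: mass lost by a sample, found by weighing before and after.
before_g, after_g = 1000.0, 998.0
u_each_g = 0.5  # same uncertainty on each weighing

diff, u_diff = difference_with_uncertainty(before_g, u_each_g, after_g, u_each_g)

rel_single = u_each_g / before_g  # relative uncertainty of one weighing
rel_diff = u_diff / diff          # relative uncertainty of the small difference
print(f"relative uncertainty of one weighing:   {rel_single:.2%}")
print(f"relative uncertainty of the difference: {rel_diff:.0%}")
```

Each weighing is individually very good, yet the quantity of interest ends up with a relative uncertainty hundreds of times larger, which is the motivation for null designs that measure the change directly.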

Once we have established the error and uncertainty of a measurement, we also have to report these results and explain our analysis. Technical writing is a very distinct class of writing with many different formats and audiences. It can be difficult at times to strike the right tone and level of technical information. In this class we will focus on Laboratory Reports, but as you can see below there are many different forms of technical writing.

Forms of Technical Writing:

• Executive summary

– Audience is a supervisor. Summary should be concise, usually only a few paragraphs, and highlight key aspects of work in first paragraph.

– Consequences of a summary that fails to convey the key points can be drastic, as in the case of the Challenger Shuttle tragedy.

• Laboratory note or technical memo

– Limited audience, immediate supervisor

– Sufficient information should be included so the experimentalist can reconstruct the experiment

• Progress report

– Audience is supervisor of sponsoring agency

– Can vary in terms of length, structure, timelines, etc.

• Full technical report

– Wider audience typically not people closely associated with the work

– Relates all facts pertinent to project

– Contains enough detail so another professional can repeat experiments

Line Fitting: Identify Outliers and Physical Interpretation

When you are fitting a curve or a line to a data set, you must be careful to select a fit that is appropriate for the experiment being conducted. Often researchers are too consumed with obtaining a high \(R^2\) value. While the goodness of a fit is important, you must make sure that the equation you are fitting matches the physics of the experiment you are conducting. For example, if you are measuring how an electrochemical cell voltage changes as a function of temperature, you must use the Nernst equation. Looking at the data, perhaps a 12th-degree polynomial fit will give you a better \(R^2\) value, but that equation has no physical meaning in terms of your analysis. So always keep that in mind.

When identifying outliers, if a particular point is in doubt, temporarily exclude the data point and fit a new line through the remaining data. If the fit is better, the point can possibly be dropped. However, the point could instead indicate that the relationship is not linear, or that there is another scaling regime or a transition in behavior.

We can also apply this same error analysis procedure to optimize your experimental apparatus and minimize the error/total uncertainty. One can identify which component or part of the measuring process generates the most error and then redesign the apparatus to minimize that error.
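The exclude-and-refit check can be sketched in a few lines. The example below fits an ordinary least-squares line to a small made-up data set, then refits with one suspect point temporarily removed and compares the two fits. The data, the choice of suspect point, and the helper name `linear_fit` are all hypothetical.

```python
def linear_fit(xs, ys):
    """Ordinary least-squares line y = slope * x + intercept, plus R^2."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    ss_res = sum((y - (slope * x + intercept)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - y_bar) ** 2 for y in ys)
    return slope, intercept, 1.0 - ss_res / ss_tot


# Hypothetical data that follow y = 2x + 1 closely, except for the point at index 4.
xs = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
ys = [1.1, 2.9, 5.2, 6.9, 14.0, 11.1, 12.9]
suspect = 4

slope_all, intercept_all, r2_all = linear_fit(xs, ys)

# Temporarily exclude the suspect point and fit a new line through the rest.
xs_kept = [x for i, x in enumerate(xs) if i != suspect]
ys_kept = [y for i, y in enumerate(ys) if i != suspect]
slope_kept, intercept_kept, r2_kept = linear_fit(xs_kept, ys_kept)

print(f"all points:      slope = {slope_all:.2f}, R^2 = {r2_all:.3f}")
print(f"suspect removed: slope = {slope_kept:.2f}, R^2 = {r2_kept:.3f}")
```

A better fit after removal is only a hint. Whether the point is dropped still depends on a physical reason for doing so, since the same pattern could signal a transition in behavior rather than a bad reading.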