2: Boolen Algebra - blah blah

- Page ID

- 114085

\( \newcommand{\vecs}[1]{\overset { \scriptstyle \rightharpoonup} {\mathbf{#1}} } \)

\( \newcommand{\vecd}[1]{\overset{-\!-\!\rightharpoonup}{\vphantom{a}\smash {#1}}} \)

\( \newcommand{\dsum}{\displaystyle\sum\limits} \)

\( \newcommand{\dint}{\displaystyle\int\limits} \)

\( \newcommand{\dlim}{\displaystyle\lim\limits} \)

\( \newcommand{\id}{\mathrm{id}}\) \( \newcommand{\Span}{\mathrm{span}}\)

( \newcommand{\kernel}{\mathrm{null}\,}\) \( \newcommand{\range}{\mathrm{range}\,}\)

\( \newcommand{\RealPart}{\mathrm{Re}}\) \( \newcommand{\ImaginaryPart}{\mathrm{Im}}\)

\( \newcommand{\Argument}{\mathrm{Arg}}\) \( \newcommand{\norm}[1]{\| #1 \|}\)

\( \newcommand{\inner}[2]{\langle #1, #2 \rangle}\)

\( \newcommand{\Span}{\mathrm{span}}\)

\( \newcommand{\id}{\mathrm{id}}\)

\( \newcommand{\Span}{\mathrm{span}}\)

\( \newcommand{\kernel}{\mathrm{null}\,}\)

\( \newcommand{\range}{\mathrm{range}\,}\)

\( \newcommand{\RealPart}{\mathrm{Re}}\)

\( \newcommand{\ImaginaryPart}{\mathrm{Im}}\)

\( \newcommand{\Argument}{\mathrm{Arg}}\)

\( \newcommand{\norm}[1]{\| #1 \|}\)

\( \newcommand{\inner}[2]{\langle #1, #2 \rangle}\)

\( \newcommand{\Span}{\mathrm{span}}\) \( \newcommand{\AA}{\unicode[.8,0]{x212B}}\)

\( \newcommand{\vectorA}[1]{\vec{#1}} % arrow\)

\( \newcommand{\vectorAt}[1]{\vec{\text{#1}}} % arrow\)

\( \newcommand{\vectorB}[1]{\overset { \scriptstyle \rightharpoonup} {\mathbf{#1}} } \)

\( \newcommand{\vectorC}[1]{\textbf{#1}} \)

\( \newcommand{\vectorD}[1]{\overrightarrow{#1}} \)

\( \newcommand{\vectorDt}[1]{\overrightarrow{\text{#1}}} \)

\( \newcommand{\vectE}[1]{\overset{-\!-\!\rightharpoonup}{\vphantom{a}\smash{\mathbf {#1}}}} \)

\( \newcommand{\vecs}[1]{\overset { \scriptstyle \rightharpoonup} {\mathbf{#1}} } \)

\(\newcommand{\longvect}{\overrightarrow}\)

\( \newcommand{\vecd}[1]{\overset{-\!-\!\rightharpoonup}{\vphantom{a}\smash {#1}}} \)

\(\newcommand{\avec}{\mathbf a}\) \(\newcommand{\bvec}{\mathbf b}\) \(\newcommand{\cvec}{\mathbf c}\) \(\newcommand{\dvec}{\mathbf d}\) \(\newcommand{\dtil}{\widetilde{\mathbf d}}\) \(\newcommand{\evec}{\mathbf e}\) \(\newcommand{\fvec}{\mathbf f}\) \(\newcommand{\nvec}{\mathbf n}\) \(\newcommand{\pvec}{\mathbf p}\) \(\newcommand{\qvec}{\mathbf q}\) \(\newcommand{\svec}{\mathbf s}\) \(\newcommand{\tvec}{\mathbf t}\) \(\newcommand{\uvec}{\mathbf u}\) \(\newcommand{\vvec}{\mathbf v}\) \(\newcommand{\wvec}{\mathbf w}\) \(\newcommand{\xvec}{\mathbf x}\) \(\newcommand{\yvec}{\mathbf y}\) \(\newcommand{\zvec}{\mathbf z}\) \(\newcommand{\rvec}{\mathbf r}\) \(\newcommand{\mvec}{\mathbf m}\) \(\newcommand{\zerovec}{\mathbf 0}\) \(\newcommand{\onevec}{\mathbf 1}\) \(\newcommand{\real}{\mathbb R}\) \(\newcommand{\twovec}[2]{\left[\begin{array}{r}#1 \\ #2 \end{array}\right]}\) \(\newcommand{\ctwovec}[2]{\left[\begin{array}{c}#1 \\ #2 \end{array}\right]}\) \(\newcommand{\threevec}[3]{\left[\begin{array}{r}#1 \\ #2 \\ #3 \end{array}\right]}\) \(\newcommand{\cthreevec}[3]{\left[\begin{array}{c}#1 \\ #2 \\ #3 \end{array}\right]}\) \(\newcommand{\fourvec}[4]{\left[\begin{array}{r}#1 \\ #2 \\ #3 \\ #4 \end{array}\right]}\) \(\newcommand{\cfourvec}[4]{\left[\begin{array}{c}#1 \\ #2 \\ #3 \\ #4 \end{array}\right]}\) \(\newcommand{\fivevec}[5]{\left[\begin{array}{r}#1 \\ #2 \\ #3 \\ #4 \\ #5 \\ \end{array}\right]}\) \(\newcommand{\cfivevec}[5]{\left[\begin{array}{c}#1 \\ #2 \\ #3 \\ #4 \\ #5 \\ \end{array}\right]}\) \(\newcommand{\mattwo}[4]{\left[\begin{array}{rr}#1 \amp #2 \\ #3 \amp #4 \\ \end{array}\right]}\) \(\newcommand{\laspan}[1]{\text{Span}\{#1\}}\) \(\newcommand{\bcal}{\cal B}\) \(\newcommand{\ccal}{\cal C}\) \(\newcommand{\scal}{\cal S}\) \(\newcommand{\wcal}{\cal W}\) \(\newcommand{\ecal}{\cal E}\) \(\newcommand{\coords}[2]{\left\{#1\right\}_{#2}}\) \(\newcommand{\gray}[1]{\color{gray}{#1}}\) \(\newcommand{\lgray}[1]{\color{lightgray}{#1}}\) \(\newcommand{\rank}{\operatorname{rank}}\) \(\newcommand{\row}{\text{Row}}\) \(\newcommand{\col}{\text{Col}}\) \(\renewcommand{\row}{\text{Row}}\) \(\newcommand{\nul}{\text{Nul}}\) \(\newcommand{\var}{\text{Var}}\) \(\newcommand{\corr}{\text{corr}}\) \(\newcommand{\len}[1]{\left|#1\right|}\) \(\newcommand{\bbar}{\overline{\bvec}}\) \(\newcommand{\bhat}{\widehat{\bvec}}\) \(\newcommand{\bperp}{\bvec^\perp}\) \(\newcommand{\xhat}{\widehat{\xvec}}\) \(\newcommand{\vhat}{\widehat{\vvec}}\) \(\newcommand{\uhat}{\widehat{\uvec}}\) \(\newcommand{\what}{\widehat{\wvec}}\) \(\newcommand{\Sighat}{\widehat{\Sigma}}\) \(\newcommand{\lt}{<}\) \(\newcommand{\gt}{>}\) \(\newcommand{\amp}{&}\) \(\definecolor{fillinmathshade}{gray}{0.9}\) blah blah blah. So far we have discussed how to write and interpret propositions. This section deals with manipulating them. For this, we need algebra. Ordinary algebra, of the sort taught in high school, is about manipulating numbers, variables that represent numbers, and operators such as \(+\) and \(×\) that apply to numbers. Now, we need an algebra that applies to logical values, propositional variables, and logical operators. The first person to think of logic in terms of algebra was the mathematician, George Boole, who introduced the idea in a book that he published in 1854. The algebra of logic is now called Boolean algebra in his honor.

blah blah blah. So far we have discussed how to write and interpret propositions. This section deals with manipulating them. For this, we need algebra. Ordinary algebra, of the sort taught in high school, is about manipulating numbers, variables that represent numbers, and operators such as \(+\) and \(×\) that apply to numbers. Now, we need an algebra that applies to logical values, propositional variables, and logical operators. The first person to think of logic in terms of algebra was the mathematician, George Boole, who introduced the idea in a book that he published in 1854. The algebra of logic is now called Boolean algebra in his honor.

The algebra of numbers includes a large number of rules for manipu- lating expressions. The distributive law, for example, says that \(x(y + z) =xy + xz\), where \(x, y,\) and \(z\) are variables that stand for any numbers or numerical expressions. This law means that whenever you see something of the form \(xy + xz\) in a numerical expression, you can substitute \(x(y + z)\) without changing the value of the expression, and vice versa. Note that the equals sign in \(x(y + z) = xy + xz\) means “has the same value as, no matter what numerical values \(x, y,\) and \(z\) have.”

In Boolean algebra, we work with logical values instead of numerical values. There are only two logical values, true and false. We will write these values as \(\mathbb{T}\) and \(\mathbb{F}\). The symbols \(\mathbb{T}\) and \(\mathbb{F}\) play a similar role in Boolean algebra to the role that constant numbers such as 1 and 3.14159 play in ordinary algebra. Instead of the equals sign, Boolean algebra uses logical equivalence, ≡, which has essentially the same meaning.4 For example, for propositions p, q, and r, the ≡ operator in \(p ∧ (q ∧ r) ≡ (p ∧ q) ∧ r\) means “has the same value as, no matter what logical values \(p, q,\) and \(r\) have.”

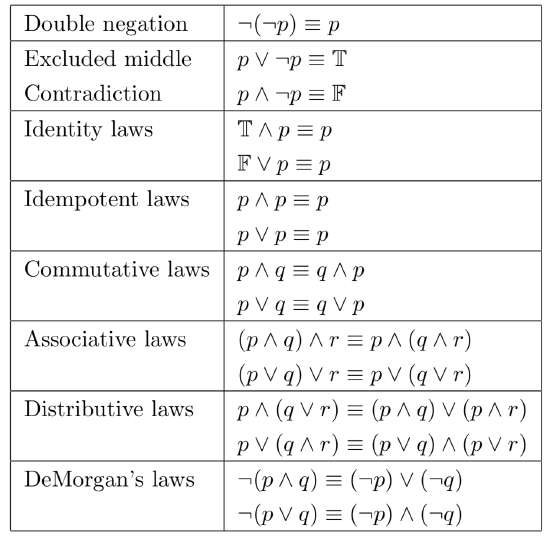

Many of the rules of Boolean algebra are fairly obvious, if you think a bit about what they mean. Even those that are not obvious can be verified easily by using a truth table. Figure 1.2 lists the most important of these laws. You will notice that all these laws, except the first, come in pairs: Each law in the pair can be obtained from the other by interchanging ∧ with ∨ and \(\mathbb{T}\) with \(\mathbb{F}\). This cuts down on the number of facts you have to remember.\(^5\)

Figure 1.2: Laws of Boolean Algebra. These laws hold for any propositions \(p, q\), and \(r\).

\(^5\)It is also an example of a more general fact known as duality, which asserts that given any tautology that uses only the operators ∧, ∨, and ¬, another tautology can be obtained from it by interchanging ∧ with ∨ and \(\mathbb{T}\) with \(\mathbb{F}\). We won’t attempt to prove this here.

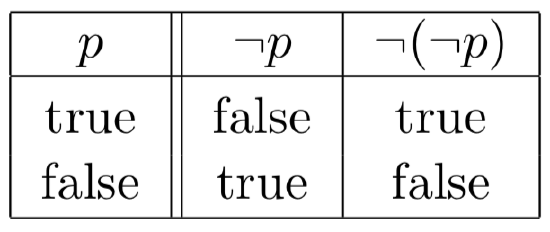

Just as an example, let’s verify the first rule in the table, the Law of Double Negation. This law is just the old, basic grammar rule that two negatives make a positive. Although this rule is questionable as it applies to English as it is actually used—no matter what the grammarian says, “I can’t get no satisfaction” doesn’t really mean “I can get satisfaction”— the validity of the rule in logic can be verified just by computing the two possible cases: when \(p\) is true and when \(p\) is false. When p is true, then by the definition of the ¬ operator, \(¬p\) is false. But then, again by the definition of ¬, the value of \(¬(¬p)\) is true, which is the same as the value of \(p\). Similarly, in the case where \(p\) is false, \(¬(¬p)\) is also false. Organized into a truth table, this argument takes the rather simple form

The fact that the first and last columns are identical shows the logical equivalence of \(p\) and \(¬(¬p)\). The point here is not just that \(¬(¬p) ≡ p\), but also that this logical equivalence is valid because it can be verified computationally based just on the relevant definitions. Its validity does not follow from the fact that “it’s obvious” or “it’s a well-known rule of grammar.” Students often ask “Why do I have to prove something when it’s obvious.” The point is that logic—and mathematics more generally—is its own little world with its own set of rules. Although this world is related somehow to the real world, when you say that something is obvious (in the real world), you aren’t playing by the rules of the world of logic. The real magic of mathematics is that by playing by its rules, you can come up with things that are decidedly not obvious, but that still say something about the real world—often, something interesting and important.

Each of the rules in Figure 1.2 can be verified in the same way, by making a truth table to check all the possible cases.

It’s important to understand that the propositional variables in the laws of Boolean algebra can stand for any propositions, including compound propositions. It is not just true, as the Double Negation Law states, that \(¬(¬p) ≡ p\). It is also true that \(¬(¬q) ≡ q\), that \(¬(¬(p∧q)) ≡ (p∧q)\), that \(¬(¬(p → (q ∧ ¬p))) ≡ (p → (q ∧ ¬p))\), and an infinite number of other statements of the same form. Here, a “statement of the same form” is one that can be obtained by substituting something for p in both places where it occurs in \(¬(¬p) ≡ p\). How can I be sure that all these infinitely many statements are valid when all that I’ve checked is one little two-line truth table? The answer is that any given proposition, Q, no matter how complicated, has a particular truth value, either true or false. So, the question of the validity of \(¬(¬Q) ≡ Q\) always reduces to one of the two cases I already checked in the truth table. (Note that for this argument to be valid, the same Q must be substituted for p in every position where it occurs.) While this argument may be “obvious,” it is not exactly a proof, but for now we will just accept the validity of the following theorem:

Theorem 1.1 First Substitution Law

Suppose that \(Q\) is any proposition, and that \(p\) is a propositional variable. Consider any tautology. If (\(Q)\) is substituted for \(p\) in all places where \(p\) occurs in the tautology, then the result is also a tautology.

Since logical equivalence is defined in terms of tautology, it is also true that when \((Q)\) is substituted for p in a logical equivalence, the result is again a logical equivalence.\(^6\)

The First Substitution Law lets you do algebra! For example, you can substitute \(p → q\) for \(p\) in the law of double negation, \(¬(¬p) ≡ p\). This al- lows you to “simplify” the expression \(¬(¬(p → q)) to p → q\) with confidence that the resulting expression has the same logical value as the expression you started with. (That’s what it means for \(¬(¬(p → q))\) and \(p → q\) to be logically equivalent.) You can play similar tricks with all the laws in Figure 1.2. Even more important is the Second Substitution Law, which says that you can substitute an expression for a logically equivalent expression, wherever it occurs. Once again, we will accept this as a theorem without trying to prove it here. It is surprisingly hard to put this law into words:

Theorem 1.2 Second Substitution Law

Suppose that \(P\) and \(Q\) are any propositions such that \(P ≡ Q\). Suppose that \(R\) is any compound proposition in which \((P)\) occurs as a subproposition. Let \(R′\) be the proposition that is obtained by substituting \((Q)\) for that occurrence of \((P)\) in \(R\). Then \(R ≡ R′\).

Note that in this case, the theorem does not require \((Q)\) to be substituted for every occurrence of \((P)\) in \(R\). You are free to substitute for one, two, or as many occurrences of \((P)\) as you like, and the result is still logically equivalent to \(R\).

The Second Substitution Law allows us to use the logical equivalence \(¬(¬p) ≡ p\) to “simplify” the expression \(q → (¬(¬p))\) by substituting \((p)\) for \((¬(¬p))\). The resulting expression, \(q → (p)\), or just \(q → p\) without the parentheses, is logically equivalent to the original \(q → (¬(¬p))\). Once again, we have to be careful about parentheses: The fact that \(p ∨ p ≡ p\)does not allow us to rewrite \(q∧p∨p∧r\) as \(q∧p∧r\). The problem is that \(q∧p∨p∧r\) means \((q∧p)∨(p∧r)\), so that \((p∨p)\) is not a subexpression. So even though in practice we won’t always write all the parentheses, you always have to be aware of where the parentheses belong.

\(^6\)I’ve added parentheses around \(Q\) here for technical reasons. Sometimes, the parentheses are necessary to make sure that \(Q\) is evaluated as a whole, so that its final value is used in place of \(p\). As an example of what can go wrong, consider \(q ∧ r\). If this is substituted literally for \(p\) in \(¬(¬p)\), without parentheses, the result is \(¬(¬q ∧ r)\). But this expression means \(¬((¬q) ∧ r)\), which is not equivalent to \(q ∧ r\).

Substitution Law: The equivalence \(Q ≡ R\) allows us to substitute \(R\) for \(Q\) in the statement \(P ≡ Q\), giving \(P ≡ R\). (Remember that, by Definition 1.4, logical equivalence is defined in terms of a proposition.) This means that we can show that two compound propositions are logically equivalent by finding a chain of logical equivalences that lead from one to the other. For example:

\(p ∧ (p → q) ≡ p ∧ (¬p ∨ q)\) definition of \(p → q\), Theorem 1.2

\(≡ (p ∧ ¬p) ∨ (p ∧ q)\) Distributive Law

\(≡ \mathbb{T} ∨ (p ∧ q)\) Law of Contradiction, Theorem 1.2

\(≡ (p ∧ q)\) Identity Law

Each step in the chain has its own justification. In several cases, a substitution law is used without stating as much. In the first line, for example, the definition of \(p → q\) is that \(p → q ≡ ¬p ∨ q\). The Second Substitution Law allows us to substitute \((¬p ∨ q)\) for \((p → q)\). In the last line, we implicitly applied the First Substitution Law to the Identity Law, \(\mathbb{T} ∨ p ≡ p\), to obtain \(\mathbb{F} ∨ (p ∧ q) ≡ (p ∧ q)\).

The chain of equivalences in the above example allows us to conclude that \(p ∧ (p → q)\) is logically equivalent to \(p ∧ q\). This means that if you were to make a truth table for these two expressions, the truth values in the column for \(p ∧ (p → q)\) would be identical to those in the column for \(p ∧ q\). We know this without actually making the table. In this case, the table would only be four lines long and easy enough to make. But Boolean algebra can be applied in cases where the number of propositional variables is too large for a truth table to be practical.

Let’s do another example. Recall that a compound proposition is a tautology if it is true for all possible combinations of truth values of the propositional variables that it contains. But another way of saying the same thing is that \(P\) is a tautology if \(P ≡ T\). So, we can prove that a compound proposition, \(P\), is a tautology by finding a chain of logical equivalences leading from \(P to T\). For example:

\(((p ∨ q) ∧ ¬p) → q\)

\(≡ (¬((p ∨ q) ∧ ¬p)) ∨ q\) definition of →

\(≡ (¬(p ∨ q) ∨ ¬(¬p)) ∨ q\) DeMorgan’s Law, Theorem 1.2

\(≡ (¬(p ∨ q) ∨ p) ∨ q\) Double Negation, Theorem 1.2

\(≡ (¬(p ∨ q)) ∨ (p ∨ q)\) Associative Law for ∨

\(≡T\) Law of Excluded Middle

From this chain of equivalences, we can conclude that \(((p ∨ q) ∧ ¬p) → q\) is a tautology.

Now, it takes some practice to look at an expression and see which rules can be applied to it; to see \((¬(p ∨ q)) ∨ (p ∨ q)\) as an application of the law of the excluded middle for example, you need to mentally substitute \((p ∨ q)\) for p in the law as it is stated in Figure 1.2. Often, there are several rules that apply, and there are no definite guidelines about which one you should try. This is what makes algebra something of an art.

It is certainly not true that all possible rules of Boolean algebra are given in Figure 1.2. For one thing, there are many rules that are easy consequences of the rules that are listed there. For example, although the table asserts only that \(\mathbb{F} ∨ p ≡ p\), it is also true that \(p ∨ \mathbb{F} ≡ p\). This can be checked directly or by a simple calculation:

\(p ∨ \mathbb{F} ≡ \mathbb{F} ∨ p\) Commutative Law

\(≡ p\) Identity Law as given in the table

Additional rules can be obtained by applying the Commutative Law to other rules in the table, and we will use such rules freely in the future.

Another sort of easy extension can be applied to the Associative Law, \((p∨q)∨r ≡ p∨(q∨r)\). The law is stated for the ∨ operator applied to three terms, but it generalizes to four or more terms. For example

\(((p∨q)∨r)∨s\)

\(≡ (p ∨ q) ∨ (r ∨ s)\) by the Associative Law for three terms

\(≡ p ∨ (q ∨ (r ∨ s))\) by the Associative Law for three terms

We will, of course, often write this expression as \(p ∨ q ∨ r ∨ s\), with no parentheses at all, knowing that wherever we put the parentheses the value is the same.

One other thing that you should keep in mind is that rules can be applied in either direction. The Distributive Law, for example, allows you to distribute the \(p\) in \(p∨(q∧¬p) \) to get \((p∨q)∧(p∨¬p)\). But it can also be used in reverse to “factor out” a term, as when you start with \((q∨(p → q))∧(q∨(q → p))\) and factor out the \(q\) to get \(q∨((p → q)∧(q → p))\).

So far in this section, I have been working with the laws of Boolean alge- bra without saying much about what they mean or why they are reasonable. Of course, you can apply the laws in calculations without understanding them. But if you want to figure out which calculations to do, you need some understanding. Most of the laws are clear enough with a little thought. For example, if we already know that q is false, then \(p ∨ q\) will be true when \(p\) is true and false when p is false. That is, \(p ∨ \mathbb{F}\) has the same logical value as \(p\). But that’s just what the Identity Law for \(∨\) says. A few of the laws need more discussion.

The Law of the Excluded Middle, \(p ∨ ¬p ≡ \mathbb{T}\), says that given any proposition \(p\), at least one of \(p\) or \(¬p\) must be true. Since \(¬p\) is true exactly when p is false, this is the same as saying that p must be either true or false. There is no middle ground.(^7) The Law of Contradiction, \(p ∧ ¬p ≡ \mathbb{F}\), says that it is not possible for both \(p\) and \(¬p\) to be true. Both of these rules are obvious.

The Distributive Laws cannot be called obvious, but a few examples can show that they are reasonable. Consider the statement, “This card is the ace of spades or clubs.” Clearly, this is equivalent to “This card is the ace of spaces or this card is the ace of clubs.” But this is just an example of the first distributive law! For, let a represent the proposition “This card is an ace,” let s represent “This card is a spade,” and let c represent “This card is a club.” Then “This card is the ace of spades or clubs” can be translated into logic as \(a ∧ (s ∨ c)\), while “This card is the ace of spades or this card is the ace of clubs” becomes \((a ∧ s) ∨ (a ∧ c)\). And the distributive law assures us that \(a∧(s∨c)≡(a∧s)∨(a∧c)\). The second distributive law tells us, for example, that “This card is either a joker or is the ten of diamonds” is logically equivalent to “This card is either a joker or a ten, and it is either a joker or a diamond.” That is, \(j ∨(t∧d) ≡ (j ∨t)∧(j ∨d)\). The distributive laws are powerful tools and you should keep them in mind whenever you are faced with a mixture of \(∧\) and \(∨\) operators.

\(^7)In propositional logic, this is easily verified with a small truth table. But there is a surprising amount of argument about whether this law is valid in all situations. In the real world, there often seems to be a gray area between truth and falsity. Even in mathematics, there are some people who think there should be a third truth value, one that means something like “unknown” or “not proven.” But the mathematicians who think this way tend to be considered a bit odd by most other mathematicians.

DeMorgan’s Laws must also be less than obvious, since people often get them wrong. But they do make sense. When considering \(¬(p ∧ q)\), you should ask yourself, how can \(“p and q”\) fail to be true. It will fail to be true if either \(p\) is false or if \(q\) is false (or both). That is, \(¬(p ∧ q)\) is equivalent to \((¬p)∨(¬q)\). Consider the sentence “A raven is large and black.” If a bird is not large and black, then it is not a raven. But what exactly does it mean to be “not (large and black)”? How can you tell whether the assertion “not (large and black)” is true of something? This will be true if it is either not large or not black. (It doesn’t have to be both—it could be large and white, it could be small and black.) Similarly, for \(“p or q”\) to fail to be true, both \(p\) and \(q\) must be false. That is, \(¬(p ∨ q)\) is equivalent to \((¬p) ∧ (¬q)\). This is DeMorgan’s second law.

Recalling that \(p → q\) is equivalent to \((¬p)∨q\), we can apply DeMorgan’s law to obtain a formula for the negation an implication:

\(¬(p → q) ≡ ¬((¬p) ∨ q)\)

\(≡ (¬(¬p)) ∧ (¬q)\)

\(≡ p ∧ ¬q\)

That is, \(p → q\) is false exactly when both \(p\) is true and \(q\) is false. For example, the negation of “If you have an ace, you win” is “You have an ace, and you don’t win.” Think of it this way: if you had an ace and you didn’t win, then the statement “If you have an ace, you win” was not true.

Exercises

- Construct truth tables to demonstrate the validity of each of the distributive laws.

- Construct the following truth tables:

a) Construct truth tables to demonstrate that \(¬(p ∧ q)\) is not logically

equivalent to \((¬p) ∧ (¬q)\).

b) Construct truth tables to demonstrate that \(¬(p ∨ q)\) is not logically equivalent to \((¬p) ∨ (¬q)\).

c) Construct truth tables to demonstrate the validity of both DeMorgan’s Laws. - Construct truth tables to demonstrate that ¬(p → q) is not logically equiva- lent to any of the following.

a) \((¬p) → (¬q)\)

b) \((¬p) → q\)

c) \(p → (¬q)\)

Refer back to this section for a formula that is logically equivalent to ¬(p → q). - Is \(¬(p ↔ q)\) logically equivalent to \((¬p) ↔ (¬q)\)?

- In the algebra of numbers, there is a distributive law of multiplication over addition: \(x(y + z) = xy + xz\). What would a distributive law of addition over multiplication look like? Is it a valid law in the algebra of numbers?

- The distributive laws given in Figure 1.2 are sometimes called the left distributive laws. The right distributive laws say that \((p∨q)∧r ≡ (p∧r)∨(q∧r) \) and that \((p∧q)∨r ≡ (p∨r)∧(q∨r)\). Show that the right distributive laws are also valid laws of Boolean algebra. (Note: In practice, both the left and the right distributive laws are referred to simply as the distributive laws, and both can be used freely in proofs.)

- Show that \(p∧(q∨r∨s) ≡ (p∧q)∨(p∧r)∨(p∧s)\) for any propositions \(p, q, r,\) and \(s\). In words, we can say that conjunction distributes over a disjunction of three terms. (Recall that the \(∧\) operator is called conjunction and \(∨\) is called disjunction.) Translate into logic and verify the fact that conjunction distributes over a disjunction of four terms. Argue that, in fact, conjunction distributes over a disjunction of any number of terms.

- There are two additional basic laws of logic, involving the two expression \(p∧\mathbb{F} and \(p ∨ \mathbb{T}\). What are the missing laws? Show that your answers are, in fact, laws.

- For each of the following pairs of propositions, show that the two propositions are logically equivalent by finding a chain of equivalences from one to the other. State which definition or law of logic justifies each equivalence in the chain.

a) \(p∧(q∧p), p∧q\)

b) \((¬p)→q, p∨q\)

c) \((p∨q)∧¬q, p∧¬q\)

d) \(p→(q→r), (p∧q)→r\)

e) \((p→r)∧(q→r), (p∨q)→r\)

f) \(p→(p∧q), p→q\) - For each of the following compound propositions, find a simpler proposition that is logically equivalent. Try to find a proposition that is as simple as possible.

a) \((p∧q)∨¬q\)

b) \(¬(p∨q)∧p\)

c) \(p → ¬p\)

d) \(¬p∧(p∨q)\)

e) \((q∧p)→q\)

f) \((p→q)∧(¬p→q)\) - Express the negation of each of the following sentences in natural English:

a) It is sunny and cold.

b) I will have cake or I will have pie.

c) If today is Tuesday, this is Belgium.

d) If you pass the final exam, you pass the course. - Apply one of the laws of logic to each of the following sentences, and rewrite it as an equivalent sentence. State which law you are applying.

a) I will have coffee and cake or pie.

b) He has neither talent nor ambition.

c) You can have spam, or you can have spam.