7.4: Framebuffers

The term “frame buffer” traditionally refers to the region of memory that holds the color data for the image displayed on a computer screen. In WebGL, a framebuffer is a data structure that organizes the memory resources that are needed to render an image. A WebGL graphics context has a default framebuffer, which is used for the image that appears on the screen. The default framebuffer is created by the call to canvas.getContext() that creates the graphics context. Its properties depend on the options that are passed to that function and cannot be changed after it is created. However, additional framebuffers can be created, with properties controlled by the WebGL program. They can be used for off-screen rendering, and they are required for certain advanced rendering algorithms.

A framebuffer can use a color buffer to hold the color data for an image, a depth buffer to hold a depth value for each pixel, and something called a stencil buffer (which is not covered in this textbook). The buffers are said to be “attached” to the framebuffer. For a non-default framebuffer, buffers can be attached and detached by the WebGL program at any time. A framebuffer doesn’t need a full set of three buffers, but you need a color buffer, a depth buffer, or both to be able to use the framebuffer for rendering. If the depth test is not enabled when rendering to the framebuffer, then no depth buffer is needed. And some rendering algorithms, such as shadow mapping (Subsection 5.3.3), use a framebuffer with a depth buffer but no color buffer.

The rendering functions gl.drawArrays() and gl.drawElements() affect the current framebuffer, which is initially the default framebuffer. The current framebuffer can be changed by calling

gl.bindFramebuffer( gl.FRAMEBUFFER, frameBufferObject );

The first parameter to this function is always gl.FRAMEBUFFER. The second parameter can be null to select the default framebuffer for drawing, or it can be a non-default framebuffer created by the function gl.createFramebuffer(), which will be discussed below.

Framebuffer Operations

Before we get to examples of using non-default framebuffers, we look at some WebGL settings that affect rendering into whichever framebuffer is current. Examples that we have already seen include the clear color, which is used to fill the color buffer when gl.clear() is called, and the enabled state of the depth test.

Another example that affects the use of the depth buffer is the depth mask, a boolean value that controls whether values are written to the depth buffer during rendering. (The enabled state of the depth test determines whether values from the depth buffer are used during rendering; the depth mask determines whether new values are written to the depth buffer.) Writing to the depth buffer can be turned off with the command

gl.depthMask( false );

and can be turned back on by calling gl.depthMask(true). The default value is true.

One example of using the depth mask is for rendering translucent geometry. When some of the objects in a scene are translucent, then all of the opaque objects should be rendered first, followed by the translucent objects. (Suppose that you rendered a translucent object, and then rendered an opaque object that lies behind the translucent object. The depth test would cause the opaque object to be hidden by the translucent object. But “translucent” means that the opaque object should be visible through the translucent object. So it’s important to render all the opaque objects first.) And it’s important to turn off writing to the depth buffer, by calling gl.depthMask(false), while rendering the translucent objects. The reason is that a translucent object that is drawn behind another translucent object should be visible through the front object. Note, however, that the depth test must still be enabled while the translucent objects are being rendered, since a translucent object can be hidden by an opaque object. Also, alpha blending must be on while rendering the translucent objects.

For fully correct rendering of translucent objects, the translucent primitives should be sorted into back-to-front order before rendering, as in the painter’s algorithm (Subsection 3.1.4). However, that can be difficult to implement, and acceptable results can sometimes be obtained by rendering the translucent primitives in arbitrary order (but still after the opaque primitives). In fact that was done in the demos c3/rotation-axis.html from Subsection 3.2.2 and c3/transform-equivalence-3d.html from Subsection 3.3.4.
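The rendering order described above can be sketched as one function. This is only an illustration, not code from the demos: the callback drawObject, the distance property (distance of an object from the viewer), and the two object lists are hypothetical names, and sorting whole objects is only an approximation of sorting individual primitives.

```javascript
// Sketch only: drawObject and the "distance" property are hypothetical.
function drawOpaqueThenTranslucent(gl, opaqueObjects, translucentObjects, drawObject) {
    // Pass 1: opaque objects, with normal depth testing and depth writes.
    gl.enable(gl.DEPTH_TEST);
    gl.disable(gl.BLEND);
    gl.depthMask(true);
    for (const obj of opaqueObjects) {
        drawObject(obj);
    }

    // Pass 2: translucent objects, farthest first (sort a copy, not the original).
    const backToFront = translucentObjects.slice()
                                          .sort((a, b) => b.distance - a.distance);
    gl.enable(gl.BLEND);
    gl.blendFunc(gl.SRC_ALPHA, gl.ONE_MINUS_SRC_ALPHA);
    gl.depthMask(false);   // depth test still on, but no new depth values are written
    for (const obj of backToFront) {
        drawObject(obj);
    }

    // Restore the default state for whatever is drawn next.
    gl.depthMask(true);
    gl.disable(gl.BLEND);
}
```

If sorting is too expensive, the sort can be dropped; the rest of the function then corresponds to the "arbitrary order" approach.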

It is also possible to control writing to the color buffer, using the color mask. The color buffer has four “channels” corresponding to the red, green, blue, and alpha components of the color. Each channel can be controlled separately. You could, for example, allow writing to the red and alpha color channels, while blocking writing to the green and blue channels. That would be done with the command

gl.colorMask( true, false, false, true );

The colorMask function takes four parameters, one for each color channel. A true value allows writing to the channel; a false value blocks writing. When writing is blocked for a channel during rendering, the value of the corresponding color component is simply ignored.

One use of the color mask is for anaglyph stereo rendering (Subsection 5.3.1). An anaglyph stereo image contains two images of the scene, one intended for the left eye and one for the right eye. One image is drawn using only shades of red, while the other uses only combinations of green and blue. The two images are drawn from slightly different viewpoints, corresponding to the views from the left and the right eye. So the algorithm for anaglyph stereo has the form

gl.clearColor(0,0,0,1);

gl.clear( gl.COLOR_BUFFER_BIT | gl.DEPTH_BUFFER_BIT );

gl.colorMask( true, false, false, false ); // write to red channel only

... // set up view from left eye

... // render the scene

gl.clear( gl.DEPTH_BUFFER_BIT ); // clear only the depth buffer

gl.colorMask( false, true, true, false ); // write to green and blue channels

... // set up view from right eye

... // render the scene

One way to set up the views from the left and right eyes is simply to rotate the view by a few degrees about the y-axis. Note that the depth buffer, but not the color buffer, must be cleared before drawing the second image, since otherwise the depth test would prevent some parts of the second image from being written.

Finally, I would like to look at blending in more detail. Blending refers to how the fragment color from the fragment shader is combined with the current color of the fragment in the color buffer. The default, assuming that the fragment passes the depth test, is to replace the current color with the fragment color. When blending is enabled, the current color can be replaced with some combination of the current color and the fragment color. Previously, I have only discussed turning on alpha blending for transparency with the commands

gl.enable( gl.BLEND );

gl.blendFunc( gl.SRC_ALPHA, gl.ONE_MINUS_SRC_ALPHA );

The function gl.blendFunc() determines how the new color is computed from the current color and the fragment color. With the parameters shown here, the formula for the new color, using GLSL syntax, is

(src * src.a) + (dest * (1-src.a))

where src is the “source” color (that is, the color that is being written, the fragment color) and dest is the “destination” color (that is, the color currently in the color buffer, which is the destination of the rendering operation). And src.a is the alpha component of the source color. The parameters to gl.blendFunc() determine the coefficients — src.a and \( (1 - src.a) \) — in the formula. The default coefficients for the blend function are given by

gl.blendFunc( gl.ONE, gl.ZERO );

which specifies the formula

(src * 1) + (dest * 0)

That is, the new color is equal to the source color; there is no blending. Other coefficient values are possible, but I won’t use them here.

Note that blending applies to the alpha component as well as the RGB components of the color, which is probably not what you want. When drawing with a translucent color, it means that the color that is written to the color buffer will have an alpha component less than 1. When rendering to a canvas on a web page, this will make the canvas itself translucent, allowing the background of the canvas to show through. (This assumes that the WebGL context was created with an alpha channel, which is the default.) To avoid that, you can set the blend function with the alternative command

gl.blendFuncSeparate( gl.SRC_ALPHA, gl.ONE_MINUS_SRC_ALPHA, gl.ZERO, gl.ONE );

The two extra parameters specify separate coefficients to be used for the alpha component in the formula, while the first two parameters are used only for the RGB components. That is, the new color for the color buffer is computed using the formula

vec4( (src.rgb*src.a) + (dest.rgb*(1-src.a)), src.a*0 + dest.a*1 );

With this formula, the alpha component in the destination (the color buffer) remains the same as its original value.
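To see the difference between the two blend settings in numbers, the formulas can be evaluated by hand for one pixel. The following is a plain JavaScript simulation of the arithmetic, not a WebGL call; the function blendSeparate and the sample colors are made up for illustration, and the clamping that real hardware applies to the result is ignored.

```javascript
// Simulates the blend formula on one pixel.
// Colors are [r, g, b, a] arrays with components in the range 0 to 1.
function blendSeparate(src, dest, srcRGB, dstRGB, srcAlpha, dstAlpha) {
    return [
        src[0] * srcRGB + dest[0] * dstRGB,
        src[1] * srcRGB + dest[1] * dstRGB,
        src[2] * srcRGB + dest[2] * dstRGB,
        src[3] * srcAlpha + dest[3] * dstAlpha
    ];
}

const src = [1, 0, 0, 0.5];   // half-transparent red fragment
const dest = [0, 0, 1, 1];    // opaque blue already in the color buffer

// Like gl.blendFunc(gl.SRC_ALPHA, gl.ONE_MINUS_SRC_ALPHA):
// the same coefficients are used for RGB and for alpha.
const plain = blendSeparate(src, dest, src[3], 1 - src[3], src[3], 1 - src[3]);

// Like gl.blendFuncSeparate(gl.SRC_ALPHA, gl.ONE_MINUS_SRC_ALPHA, gl.ZERO, gl.ONE):
// alpha uses the coefficients 0 and 1, so the destination alpha is kept.
const separate = blendSeparate(src, dest, src[3], 1 - src[3], 0, 1);

console.log(plain);     // r = 0.5, g = 0, b = 0.5, a = 0.75
console.log(separate);  // r = 0.5, g = 0, b = 0.5, a = 1
```

The RGB result is the same in both cases; only the alpha written to the color buffer differs, which is what keeps the canvas opaque in the second case.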

The blend function set by gl.blendFunc(gl.ONE,gl.ONE) can sometimes be used in multi-pass algorithms. In a multi-pass algorithm, a scene is rendered several times, and the results are combined somehow to produce the final image. (Anaglyph stereo rendering is an example.) If you simply want to add up the results of the various passes, then you can fill the color buffer with zeros, enable blending, and set the blend function to (gl.ONE,gl.ONE) during rendering. As a simple example, the sample program webgl/image-blur.html uses a multi-pass algorithm to implement blurring. The scene in the example is just a texture image applied to a rectangle, so the effect is to blur the texture image. The technique involves drawing the scene nine times. In the fragment shader, the color is divided by nine. Blending is used to add the fragment colors from the nine passes, so that the final color in the color buffer is the average of the colors from the nine passes. For eight of the nine passes, the scene is offset slightly from its original position, so that the color of a pixel in the final image is the average of the colors of that pixel and the surrounding pixels from the original scene.
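The averaging argument can be checked with a few lines of ordinary JavaScript. This sketch follows one color channel of one pixel through the passes; it is not taken from image-blur.html, the function name and sample values are invented, and the rounding that a real 8-bit color buffer would introduce is ignored.

```javascript
// Additive blending, as set by gl.blendFunc(gl.ONE, gl.ONE): new = src + dest.
// "samples" holds the value that each pass would produce for one pixel.
function averageByAdditivePasses(samples) {
    let buffer = 0;                              // color buffer filled with zeros
    for (const sample of samples) {
        const src = sample / samples.length;     // the shader divides by the number of passes
        buffer = (src * 1) + (buffer * 1);       // blend coefficients are both 1
    }
    return buffer;
}

// Nine passes: the pixel itself and its eight neighbors (made-up values).
const redSamples = [0.9, 0.0, 0.9, 0.0, 0.9, 0.0, 0.9, 0.0, 0.9];
const blurred = averageByAdditivePasses(redSamples);
console.log(blurred);   // approximately 0.5, the average of the nine samples
```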

Render To Texture

The previous subsection applies to any framebuffer. But we haven’t yet used a non-default framebuffer. We turn to that topic now.

One use for a non-default framebuffer is to render directly into a texture. That is, the memory occupied by a texture image can be attached to the framebuffer as its color buffer, so that rendering operations will send their output to the texture image. The technique, which is called render-to-texture, is used in the sample program webgl/render-to-texture.html.

Texture memory is normally allocated when an image is loaded into the texture using the function gl.texImage2D or gl.copyTexImage2D. (See Section 6.4.) However, there is a version of gl.texImage2D that can be used to allocate memory without loading an image into that memory. Here is an example, from the sample program:

texture = gl.createTexture();

gl.bindTexture(gl.TEXTURE_2D, texture);

gl.texImage2D(gl.TEXTURE_2D, 0, gl.RGBA, 512, 512,

0, gl.RGBA, gl.UNSIGNED_BYTE, null);

It is the null parameter at the end of the last line that tells gl.texImage2D to allocate new memory without loading existing image data to fill that memory. Instead, the new memory is filled with zeros. The first parameter to gl.texImage2D is the texture target. The target is gl.TEXTURE_2D for normal textures, but other values are used for working with cubemap textures. The fourth and fifth parameters specify the width and height of the image; they should be powers of two. The other parameters usually have the values shown here; their meanings are the same as for the version of gl.texImage2D discussed in Subsection 6.4.3. Note that the texture object must first be created and bound; gl.texImage2D applies to the texture that is currently bound to the active texture unit.

To attach the texture to a framebuffer, you need to create a framebuffer object and make that object the current framebuffer by binding it. For example,

framebuffer = gl.createFramebuffer();

gl.bindFramebuffer(gl.FRAMEBUFFER, framebuffer);

Then the function gl.framebufferTexture2D can be used to attach the texture to the framebuffer:

gl.framebufferTexture2D( gl.FRAMEBUFFER, gl.COLOR_ATTACHMENT0,

gl.TEXTURE_2D, texture, 0 );

The first parameter is always gl.FRAMEBUFFER. The second parameter says a color buffer is being attached. (The last character in gl.COLOR_ATTACHMENT0 is a zero, which allows the possibility of having more than one color buffer attached to a framebuffer. In standard WebGL 1.0, only one color buffer is allowed; however, see Subsection 7.5.3.) The third parameter is the same texture target that was used in gl.texImage2D, and the fourth is the texture object. The last parameter is the mipmap level; it will usually be zero, which means rendering to the texture image itself rather than to one of its mipmap images.

With this setup, you are ready to bind the framebuffer and draw to the texture. After drawing the texture, call

gl.bindFramebuffer( gl.FRAMEBUFFER, null );

to start drawing again to the default framebuffer. The texture is ready for use in subsequent rendering operations. The texture object can be bound to a texture unit, and a sampler2D variable can be used in the shader program to read from the texture. You are very likely to use different shader programs for drawing to the texture and drawing to the screen. Recall that the function gl.useProgram() is used to specify the shader program.
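The setup steps for render-to-texture can be collected into one helper. This is a sketch under some assumptions, not the code of the sample program: the function name and the shape of the returned object are hypothetical, and the calls to gl.texParameteri() and gl.checkFramebufferStatus() are additions of mine (the first because no mipmaps are created here, the second to catch an incomplete framebuffer early).

```javascript
// Sketch: allocate an empty texture and attach it as the color buffer of a
// new framebuffer. The name and return value are hypothetical.
function createRenderTexture(gl, width, height) {
    const texture = gl.createTexture();
    gl.bindTexture(gl.TEXTURE_2D, texture);
    gl.texImage2D(gl.TEXTURE_2D, 0, gl.RGBA, width, height,
                  0, gl.RGBA, gl.UNSIGNED_BYTE, null);   // null: allocate memory only
    // No mipmaps are generated, so use a minification filter that does not need them.
    gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_MIN_FILTER, gl.LINEAR);

    const framebuffer = gl.createFramebuffer();
    gl.bindFramebuffer(gl.FRAMEBUFFER, framebuffer);
    gl.framebufferTexture2D(gl.FRAMEBUFFER, gl.COLOR_ATTACHMENT0,
                            gl.TEXTURE_2D, texture, 0);

    // Ask WebGL whether the framebuffer can be used for rendering as configured.
    const status = gl.checkFramebufferStatus(gl.FRAMEBUFFER);
    gl.bindFramebuffer(gl.FRAMEBUFFER, null);   // back to the default framebuffer
    if (status !== gl.FRAMEBUFFER_COMPLETE) {
        throw new Error("Framebuffer is incomplete, status " + status);
    }
    return { framebuffer: framebuffer, texture: texture };
}
```

A program would call this once during initialization, for example createRenderTexture(gl, 512, 512), and then bind the returned framebuffer whenever it wants to draw into the texture.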

In the sample program, the texture is animated, and a new image is drawn to the texture for each frame of the animation. The texture image is 2D, so the depth test is disabled while rendering it. This means that the framebuffer doesn’t need a depth buffer. In outline form, the rendering function in the sample program has the form

function draw() {

/* Draw the 2D image into a texture attached to a framebuffer. */

gl.bindFramebuffer(gl.FRAMEBUFFER,framebuffer);

gl.useProgram(prog_texture); // shader program for the texture

gl.clearColor(1,1,1,1);

gl.clear(gl.COLOR_BUFFER_BIT);

gl.disable(gl.DEPTH_TEST); // framebuffer doesn’t even have a depth buffer!

gl.viewport(0,0,512,512); // Viewport is not set automatically!

.

. // draw the texture image, which changes in each frame

.

gl.disable(gl.BLEND);

/* Now draw the main scene, which is 3D, using the texture. */

gl.bindFramebuffer(gl.FRAMEBUFFER,null); // Draw to default framebuffer.

gl.useProgram(prog); // shader program for the on-screen image

gl.clearColor(0,0,0,1);

gl.clear(gl.COLOR_BUFFER_BIT | gl.DEPTH_BUFFER_BIT);

gl.enable(gl.DEPTH_TEST);

gl.viewport(0,0,canvas.width,canvas.height); // Reset the viewport!

.

. // draw the scene

.

}

Note that the viewport has to be set by hand when drawing to a non-default framebuffer. It then has to be reset when drawing the on-screen image to match the size of the canvas where the on-screen image is rendered. I should also note that only one texture object is used in this program, so it can be bound once and for all during initialization. In this case, it is not necessary to call gl.bindTexture() in the draw() function.

This example could be implemented without using a framebuffer, as was done for the example in Subsection 4.3.6. In that example, the texture image was drawn to the default framebuffer, then copied to the texture object. However, the version in this section is more efficient because it does not need to copy the image after rendering it.

Renderbuffers

It is often convenient to use memory from a texture object as the color buffer for a framebuffer. However, sometimes it’s more appropriate to create separate memory for the buffer, not associated with any texture. For the depth buffer, that is the typical case. For such cases, the memory can be created as a renderbuffer. A renderbuffer represents memory that can be attached to a framebuffer for use as a color buffer, depth buffer, or stencil buffer. To use one, you need to create the renderbuffer and allocate memory for it. Memory is allocated using the function gl.renderbufferStorage(). The renderbuffer must be bound by calling gl.bindRenderbuffer() before allocating the memory. Here is an example that creates a renderbuffer for use as a depth buffer:

var depthBuffer = gl.createRenderbuffer();

gl.bindRenderbuffer(gl.RENDERBUFFER, depthBuffer);

gl.renderbufferStorage(gl.RENDERBUFFER, gl.DEPTH_COMPONENT16, 512, 512);

The first parameter to both gl.bindRenderbuffer and gl.renderbufferStorage must be gl.RENDERBUFFER. The second parameter to gl.renderbufferStorage specifies how the renderbuffer will be used. The value gl.DEPTH_COMPONENT16 is for a depth buffer with 16 bits for each pixel. (Sixteen bits is the only option.) For a color buffer holding RGBA colors, the second parameter could be gl.RGBA4, which uses four bits for each of the four color channels; the format gl.RGBA8, with four eight-bit values per pixel, is available only in WebGL 2.0. Other values are possible, such as gl.RGB565, which uses 16 bits per pixel with 5 bits for the red color channel, 6 bits for green, and 5 bits for blue. For a stencil buffer, the value would be gl.STENCIL_INDEX8. The last two parameters to gl.renderbufferStorage are the width and height of the buffer.

The function gl.framebufferRenderbuffer() is used to attach a renderbuffer to be used as one of the buffers in a framebuffer. It takes the form

gl.framebufferRenderbuffer(gl.FRAMEBUFFER, gl.DEPTH_ATTACHMENT, gl.RENDERBUFFER, renderbuffer);

The framebuffer must be bound by calling gl.bindFramebuffer before this function is called. The first and third parameters to gl.framebufferRenderbuffer must be as shown. The last parameter is the renderbuffer. The second parameter specifies how the renderbuffer will be used. It can be gl.COLOR_ATTACHMENT0, gl.DEPTH_ATTACHMENT, or gl.STENCIL_ATTACHMENT.
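Creating the depth renderbuffer and attaching it can be combined in one step. The helper below is a minimal sketch; its name and parameters are hypothetical, and it assumes that the caller wants the given framebuffer to remain bound afterwards.

```javascript
// Sketch: create a 16-bit depth renderbuffer and attach it to a framebuffer.
// The function name is hypothetical. The framebuffer is left bound on return.
function attachDepthRenderbuffer(gl, framebuffer, width, height) {
    const depthBuffer = gl.createRenderbuffer();
    gl.bindRenderbuffer(gl.RENDERBUFFER, depthBuffer);   // must be bound before allocating
    gl.renderbufferStorage(gl.RENDERBUFFER, gl.DEPTH_COMPONENT16, width, height);

    gl.bindFramebuffer(gl.FRAMEBUFFER, framebuffer);     // must be bound before attaching
    gl.framebufferRenderbuffer(gl.FRAMEBUFFER, gl.DEPTH_ATTACHMENT,
                               gl.RENDERBUFFER, depthBuffer);
    return depthBuffer;
}
```

The width and height should match the size of the color buffer that is attached to the same framebuffer.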

Dynamic Cubemap Textures

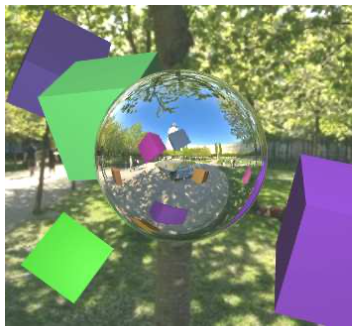

To render a 3D scene to a framebuffer, we need both a color buffer and a depth buffer. An example can be found in the sample program webgl/cube-camera.html. This example uses render-to-texture for a cubemap texture. The cubemap texture is then used as an environment map on a reflective surface. In addition to the environment map, the programs uses another cubemap texture for a skybox. (See Subsection 6.3.5.) Here’s an image from the program:

The environment in this case includes the background skybox, but also includes several colored cubes that are not part of the skybox texture. The reflective sphere in the center of the image reflects the cubes as well as the skybox, which means that the environment map texture can’t be the same as the skybox texture — it has to include the cubes. Furthermore, the scene can be animated and the cubes can move. The reflection in the sphere has to change as the cubes move. This means that the environment map texture has to be recreated in each frame. For that, we can use a framebuffer to render to the cubemap texture.

A cubemap texture consists of six images, one each for the positive and negative direction of the x, y, and z axes. Each image is associated with a different texture target (similar to gl.TEXTURE_2D). To render a cubemap, we need to allocate storage for all six sides. Here’s the code from the sample program:

cubemapTargets = [

// store texture targets in an array for convenience

gl.TEXTURE_CUBE_MAP_POSITIVE_X, gl.TEXTURE_CUBE_MAP_NEGATIVE_X,

gl.TEXTURE_CUBE_MAP_POSITIVE_Y, gl.TEXTURE_CUBE_MAP_NEGATIVE_Y,

gl.TEXTURE_CUBE_MAP_POSITIVE_Z, gl.TEXTURE_CUBE_MAP_NEGATIVE_Z

];

dynamicCubemap = gl.createTexture(); // Create the texture object.

gl.bindTexture(gl.TEXTURE_CUBE_MAP, dynamicCubemap); // bind it as a cubemap

for (i = 0; i < 6; i++) {

gl.texImage2D(cubemapTargets[i], 0, gl.RGBA, 512, 512,

0, gl.RGBA, gl.UNSIGNED_BYTE, null);

}

We also need to create a framebuffer, as well as a renderbuffer for use as a depth buffer, and we need to attach the depth buffer to the framebuffer. The same framebuffer can be used to render all six images for the texture, changing the color buffer attachment of the framebuffer as needed. To attach one of the six cubemap images as the color buffer, we just specify the corresponding cubemap texture target in the call to gl.framebufferTexture2D(). For example, the command

gl.framebufferTexture2D(gl.FRAMEBUFFER, gl.COLOR_ATTACHMENT0,

gl.TEXTURE_CUBE_MAP_NEGATIVE_Z, dynamicCubemap, 0);

attaches the negative z image from the texture object dynamicCubemap to be used as the color buffer in the currently bound framebuffer.

After the six texture images have been rendered, the cubemap texture is ready to be used. Aside from the fact that six 3D images are rendered instead of one 2D image, this is all very similar to the render-to-texture example from earlier in this section.

The question remains of how to render the six images of the scene that are needed for the cubemap texture. To make an environment map for a reflective object, we want images of the environment that surrounds that object. The images can be made with a camera placed at the center of the object. The basic idea is to point the camera in the six directions of the positive and negative coordinate axes and snap a picture in each direction, but it’s tricky to get the details correct. (And note that when we apply the result to a point on the surface, we will only have an approximation of the correct reflection. For a geometrically correct reflection at the point, we would need the view from that very point, not the view from the center of the object, but we can’t realistically make a different environment map for each point on the surface. The approximation will look OK as long as other objects in the scene are not too close to the reflective surface.)

A “camera” really means a projection transformation and a viewing transformation. The projection needs a ninety-degree field of view, to cover one side of the cube, and its aspect ratio will be 1, since the sides of the cube are squares. We can make the projection matrix with a glMatrix command such as

mat4.perspective( projection, Math.PI/2, 1, 1, 100 );

where the last two parameters, the near and far clipping distances, should be chosen to include all the objects in the scene. If we apply no viewing transformation, the camera will be at the origin, pointing in the direction of the negative z-axis. If the reflective object is at the origin, as it is in the sample program, we can use the camera with no viewing transformation to take the negative-z image for the cubemap texture.

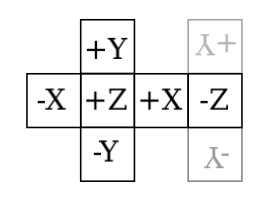

But, because of the details of how the images must be stored for cubemap textures, it turns out that we need to apply one transformation. Let’s look at the layout of images for a cubemap texture:

The six sides of the cube are shown in black, as if the sides of the cube have been opened up and laid out flat. Each side is marked with the corresponding coordinate axis direction. Duplicate copies of the plus and minus y sides are shown in gray, to show how those sides attach to the negative z side. The images that we make for the cubemap must fit together in the same way as the sides in this layout. However, the sides in the layout are viewed from the outside of the cube, while the camera will be taking a picture from the inside of the cube. To get the correct view, we need to flip the picture from the camera horizontally. After some experimentation, I found that I also need to flip it vertically, perhaps because web images are stored upside down with respect to the OpenGL convention. We can do both flips with a scaling transformation by (−1,−1,1). Putting this together, the code for making the cubemap’s negative z image is

gl.bindFramebuffer(gl.FRAMEBUFFER, framebuffer); // Draw to offscreen buffer.

gl.viewport(0,0,512,512); // Match size of the texture images.

/* Set up projection and modelview matrices for the virtual camera. */

mat4.perspective(projection, Math.PI/2, 1, 1, 100);

mat4.identity(modelview);

mat4.scale(modelview,modelview,[-1,-1,1]);

/* Attach the cubemap negative z image as the color buffer in the framebuffer,

and "take the picture" by rendering the image. */

gl.framebufferTexture2D(gl.FRAMEBUFFER, gl.COLOR_ATTACHMENT0,

gl.TEXTURE_CUBE_MAP_NEGATIVE_Z, dynamicCubemap, 0);

renderSkyboxAndCubes();

The function in the last line renders the scene, except for the central reflective object itself, and is responsible for sending the projection and modelview matrices to the shader programs.

For the other five images, we need to aim the camera in a different direction before taking the picture. That can be done by adding an appropriate rotation to the viewing transformation. For example, for the positive x image, we need to rotate the camera by −90 degrees about the y-axis. As a viewing transform, we need the command

mat4.rotateY(modelview, modelview, Math.PI/2);

It might be easier to think of this as a modeling transformation that rotates the positive x side of the cube into view in front of the camera.
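The modeling-transformation view of this rotation is easy to verify numerically. The sketch below applies a rotation about the y-axis directly to a direction vector, using the same rotation convention as mat4.rotateY; the helper rotateY defined here is a stand-alone illustration, not part of glMatrix.

```javascript
// Rotate the vector [x, y, z] about the y-axis by the given angle in radians
// (standard right-handed rotation).
function rotateY(v, angle) {
    const c = Math.cos(angle);
    const s = Math.sin(angle);
    return [ c * v[0] + s * v[2], v[1], -s * v[0] + c * v[2] ];
}

const positiveX = [1, 0, 0];                        // direction of the positive x side
const rotated = rotateY(positiveX, Math.PI / 2);
console.log(rotated);   // approximately [0, 0, -1], the direction the camera looks
```

The subsequent scaling by (−1,−1,1) leaves a vector along the z-axis unchanged, so the positive x side does end up directly in front of the camera.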

In the sample program, the six cubemap images are created in the function createDynamicCubemap(). Read the source code of that function for the full details.
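The overall shape of such a function might look like the following loop. This is a sketch and not the source of createDynamicCubemap(): the function name, the faces array, and its setView callbacks are hypothetical, and the view setup for each face is deliberately left to the caller. The call to gl.clear() for each face is my assumption; it is needed somewhere, since all six images share one depth buffer, but the scene-rendering function could do it instead.

```javascript
// Sketch: render one image per cubemap face into the same framebuffer.
// faces is an array of { target, setView } objects supplied by the caller, where
// target is a constant such as gl.TEXTURE_CUBE_MAP_NEGATIVE_Z and setView()
// sets up the projection and modelview matrices for that face (hypothetical shape).
function renderCubemapFaces(gl, framebuffer, cubemap, size, faces, renderScene) {
    gl.bindFramebuffer(gl.FRAMEBUFFER, framebuffer);   // draw to the off-screen buffer
    gl.viewport(0, 0, size, size);                     // match the size of the face images
    for (const face of faces) {
        face.setView();
        // Swap the color attachment; the depth attachment stays the same.
        gl.framebufferTexture2D(gl.FRAMEBUFFER, gl.COLOR_ATTACHMENT0,
                                face.target, cubemap, 0);
        gl.clear(gl.COLOR_BUFFER_BIT | gl.DEPTH_BUFFER_BIT);
        renderScene();
    }
    gl.bindFramebuffer(gl.FRAMEBUFFER, null);          // back to the default framebuffer
}
```

After calling this function, the program still has to reset the viewport to the canvas size before drawing the on-screen image.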

This dynamic cubemap program is a nice example, since it makes use of so many of the concepts and techniques that we have covered. You should run the program and think about everything that is going on, and how it was all implemented.