6.7: Noisy Channel Capacity Theorem

The channel capacity of a noisy channel is defined in terms of the mutual information \(M\). However, in general \(M\) depends not only on the channel (through the transfer probabilities \(c_{ji}\)) but also on the input probability distribution \(p(A_i)\). It is more useful to define the channel capacity so that it depends only on the channel, so \(M_{\text{max}}\), the maximum mutual information that results from any possible input probability distribution, is used. In the case of the symmetric binary channel, this maximum occurs when the two input probabilities are equal. Generally speaking, going away from the symmetric case offers few if any advantages in engineered systems, and in particular the fundamental limits given by the theorems in this chapter cannot be evaded through such techniques. Therefore the symmetric case gives the right intuitive understanding.
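The claim that the symmetric binary channel reaches its maximum mutual information at equal input probabilities can be checked numerically. The sketch below is only an illustration under stated assumptions: the names `binary_entropy`, `mutual_information_sbc`, and `best_input_probability` are hypothetical, `eps` stands for the bit error probability of the channel, and the mutual information is taken as the output entropy minus the entropy of the noise, which is the standard expression for this channel.

```python
import math
from typing import Tuple


def binary_entropy(x: float) -> float:
    """Entropy in bits of a two-outcome distribution (x, 1 - x)."""
    if x <= 0.0 or x >= 1.0:
        return 0.0
    return -x * math.log2(x) - (1.0 - x) * math.log2(1.0 - x)


def mutual_information_sbc(p_input: float, eps: float) -> float:
    """Mutual information M (bits) of a symmetric binary channel.

    p_input is the probability of the first input symbol and eps is the
    bit error probability (both names are assumptions of this sketch).
    """
    # Probability of observing the first output symbol.
    p_output = p_input * (1.0 - eps) + (1.0 - p_input) * eps
    # M = (entropy of the output) - (entropy of the output given the input).
    return binary_entropy(p_output) - binary_entropy(eps)


def best_input_probability(eps: float, steps: int = 1000) -> Tuple[float, float]:
    """Scan input probabilities on a grid; return the maximizer and M_max."""
    candidates = [k / steps for k in range(steps + 1)]
    best_p = max(candidates, key=lambda p: mutual_information_sbc(p, eps))
    return best_p, mutual_information_sbc(best_p, eps)


best_p, m_max = best_input_probability(0.1)
print(f"best input probability = {best_p}, M_max = {m_max:.4f} bits")
```

For an error probability of 0.1 the scan settles on an input probability of 0.5, and the resulting \(M_{\text{max}}\) is a little over half a bit per channel use. A grid scan is used instead of calculus only to keep the sketch short.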

The channel capacity is defined as

\(C = M_{\text{max}}W \tag{6.29}\)

where \(W\) is the maximum rate at which the output state can follow changes at the input. Thus \(C\) is expressed in bits per second.
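As a worked instance of Equation 6.29, the following sketch computes \(C\) for a symmetric binary channel. The numbers are assumptions chosen purely for illustration (a bit error probability of 0.1 and \(W = 1000\) changes per second), and the function names are hypothetical. It uses the fact that, for this channel with equal input probabilities, \(M_{\text{max}}\) is one bit minus the entropy of the noise.

```python
import math


def binary_entropy(x: float) -> float:
    """Entropy in bits of a two-outcome distribution (x, 1 - x)."""
    if x <= 0.0 or x >= 1.0:
        return 0.0
    return -x * math.log2(x) - (1.0 - x) * math.log2(1.0 - x)


def sbc_max_mutual_information(eps: float) -> float:
    """M_max in bits per channel use for a symmetric binary channel."""
    return 1.0 - binary_entropy(eps)


def channel_capacity(m_max_bits: float, w_per_second: float) -> float:
    """Equation 6.29: C = M_max * W, in bits per second."""
    return m_max_bits * w_per_second


# Illustrative values only; neither number comes from the text.
EPS = 0.1      # assumed bit error probability
W = 1000.0     # assumed maximum rate of change, in changes per second

capacity = channel_capacity(sbc_max_mutual_information(EPS), W)
print(f"C = {capacity:.1f} bits per second")
```

With these assumed values the capacity comes out at about 531 bits per second, noticeably below the 1000 bits per second that a noiseless binary channel with the same \(W\) would carry.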

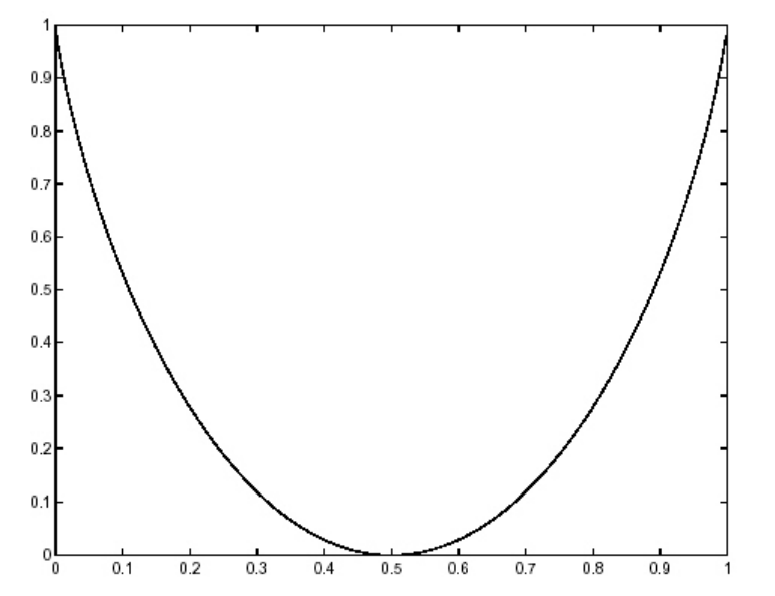

The channel capacity theorem was first proved by Shannon in 1948. It gives a fundamental limit to the rate at which information can be transmitted through a channel. If the input information rate in bits per second \(D\) is less than \(C\) then it is possible (perhaps by dealing with long sequences of inputs together) to code the data in such a way that the error rate is as low as desired. On the other hand, if \(D > C\) then this is not possible; in fact the maximum rate at which information about the input can be inferred from learning the output is \(C\). This result is exactly the same as the result for the noiseless channel, shown in Figure 6.4.

This result is really quite remarkable. A capacity figure, which depends only on the channel, was defined, and the theorem then states that a code which gives performance arbitrarily close to this capacity can be found. In conjunction with the source coding theorem, it implies that a communication channel can be designed in two stages: first, the source is encoded so that the average length of codewords is as close as desired to its entropy, and second, this stream of bits can then be transmitted at any rate up to the channel capacity with arbitrarily low error. The channel capacity is not the same as the native rate at which the input can change, but rather is degraded from that value because of the noise.

Unfortunately, the proof of this theorem (which is not given here) does not indicate how to go about finding such a code. In other words, it is not a constructive proof, in which the assertion is proved by displaying the code. In the half century since Shannon published this theorem, there have been numerous discoveries of better and better codes, to meet a variety of high speed data communication needs. However, there is not yet any general theory of how to design codes from scratch (such as the Huffman procedure provides for source coding).
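To see why finding good codes is not trivial, it may help to look at a deliberately naive one. The sketch below is an illustration, not a code from the text: it assumes a symmetric binary channel with bit error probability `eps`, sends each bit `n` times (with `n` odd), and decodes by majority vote. The function names are hypothetical.

```python
import math


def repetition_error_probability(n: int, eps: float) -> float:
    """Probability that majority voting over n copies decodes a bit wrongly."""
    if n < 1 or n % 2 == 0:
        raise ValueError("n must be a positive odd number so votes cannot tie")
    # The decoded bit is wrong when more than half of the n copies are flipped.
    return sum(
        math.comb(n, k) * eps**k * (1.0 - eps) ** (n - k)
        for k in range(n // 2 + 1, n + 1)
    )


def repetition_rate(n: int) -> float:
    """Information bits delivered per channel use when each bit is sent n times."""
    return 1.0 / n


EPS = 0.1  # assumed bit error probability, for illustration only
for n in (1, 3, 5, 7, 9):
    p_err = repetition_error_probability(n, EPS)
    print(f"n = {n}: rate = {repetition_rate(n):.3f}, error = {p_err:.6f}")
```

The error probability falls as `n` grows, but only because the rate `1/n` shrinks toward zero. The theorem promises something much stronger: an arbitrarily low error rate at any fixed rate below capacity. Repetition does not deliver that, which is why better codes had to be discovered.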

Claude Shannon (1916–2001) is rightly regarded as the greatest figure in communications in all history.\(^2\) He established the entire field of scientific inquiry known today as information theory. He did this work while at Bell Laboratories, after he graduated from MIT with his S.M. in electrical engineering and his Ph.D. in mathematics. It was he who recognized the binary digit as the fundamental element in all communications. In 1956 he returned to MIT as a faculty member. In his later life he suffered from Alzheimer’s disease, and, sadly, was unable to attend a symposium held at MIT in 1998 honoring the 50th anniversary of his seminal paper.

\(^2\)See a biography of Shannon at http://www-groups.dcs.st-andrews.ac....s/Shannon.html