9.4: The Kalman Filter

- Page ID

- 14826

The location of a robot is subject to uncertainty due to wheelslip and encoder noise. We learned in the past how the variance in position can be derived from the variance of the robot’s drivetrain using the error propagation law and the forward kinematics of the robot. One can see that this error is continuously increasing unless the robot has additional observations, e.g., of a static object with known location. This update can be formally done using Bayes’ rule, which relates the likelihood to be at a certain position given that the robot sees a certain feature to the likelihood to see this feature at the hypothetical location. For example, a robot that drives towards a wall will become less and less certain of its position (action update) until it encounters the wall (perception update). It can then use its sensor model that relates its observation with possible positions. Its real location must be therefore somewhere between its original belief and where the sensor tells it to be. Bayes’ rule allows us to perform this location for discrete locations and discrete sensor error distributions. This is inconvenient as we are used to represent our robot’s position with a 2D Gaussian distribution. Also, it seems much easier to just change the mean and variances of this Gaussian instead of updating hundreds of variables. The goals of this section are

- to introduce a technique known as the Kalman filter to perform action and perception updates exclusively using Gaussian distributions.

- to formally introduce the notion of a feature map.

- to develop an example that puts everything we learned so far together: forward kinematics, error propagation and feature estimation.

9.4.1. Probabilistic Map-based localization

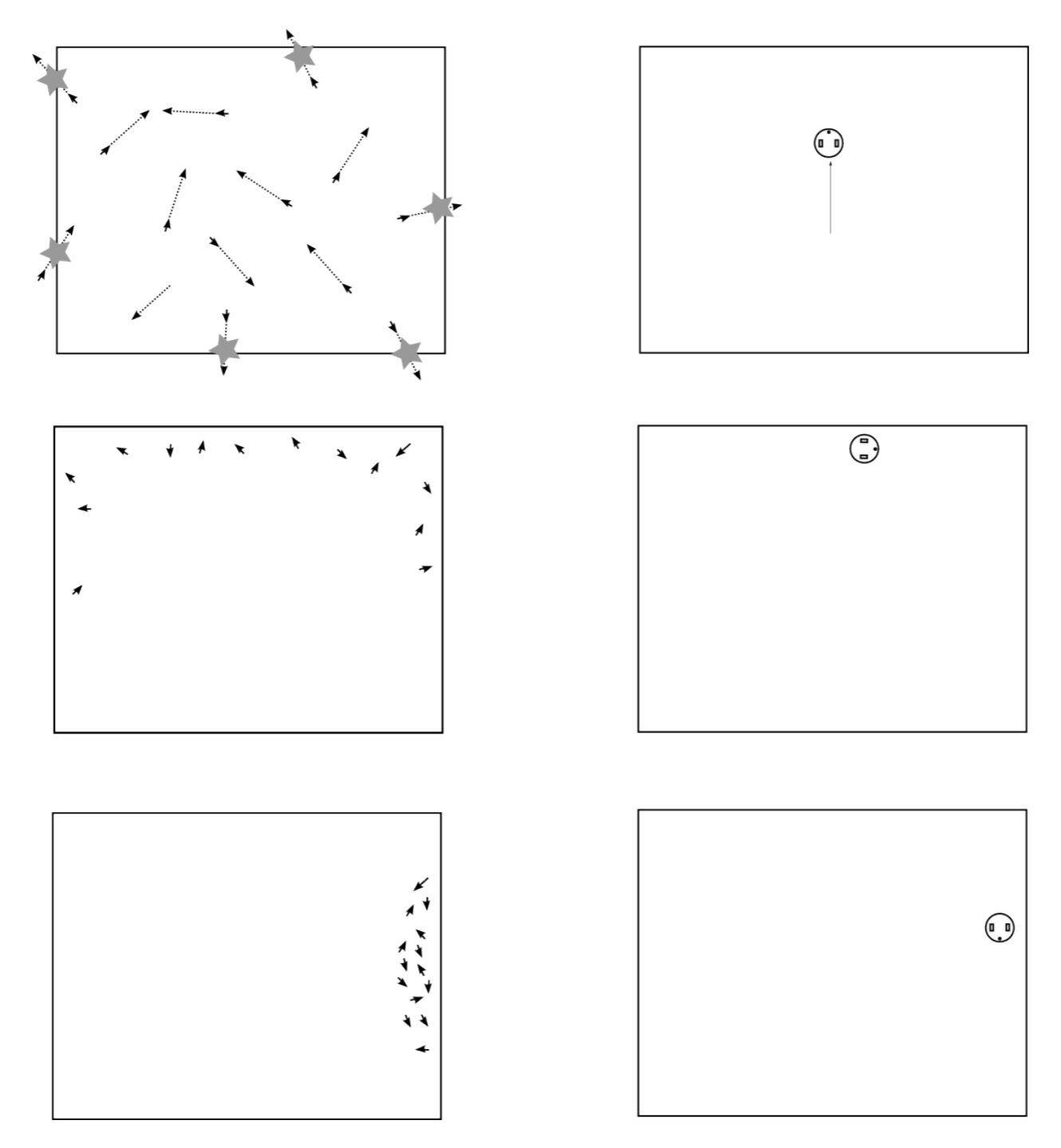

In order to localize a robot using a map, we need to perform the following steps

- Calculate an estimate of our new position using the forward kinematics and knowledge of the wheel-speeds that we sent to the robot until the robot encounters some uniquely identifiable feature.

- Calculate the relative position of the feature (a wall, a landmark or beacon) to the robot.

- Use knowledge of where the feature is located in global coordinates to predict what the robot should see.

- Calculate the difference between what the robot actually sees and what it believes it should see.

- Use the result from (4) to update its belief by weighing each observation with its variance.

Steps 1-2 are based on the sections on “Forward Kinematics” and “Line detection”. Step 3 uses again simple forward kinematics to calculate the position of a feature stored in global coordinates in a map in robot coordinates. Step 4 is a simple subtraction of what the sensor sees and what the map says. Step 5 introduces the Kalman filter. Its derivation is involved, but its intuition is simple: why just averaging between where I think I am and what my sensors tell me, if my sensors are much more reliable and should carry much higher weight?

9.4.2. Optimal Sensor Fusion

The Kalman filter is an optimal way to fuse observations that follow a Gaussian distribution. The Kalman filter has an update and a prediction step. The update step uses a dynamical model of the system (such as the forward kinematics of your robot) and the prediction step uses a sensor model (such as the error distribution calibrated from its sensors). The Kalman filter does not only update the state of the system (the robot’s position) but also its variance. For this, it requires knowledge of all the variances involved in the system (e.g., wheel-slip and sensor error) and uses them to weigh each measurement accordingly. Before providing the equations for the Kalman filter, we will make use of a simple example that explains what “optimal” means in this context.

Let q̂1 and q̂2 be two different estimates of a random variable and σ21 and σ22 their variances, respectively. Let q be the true value. This could be the robot’s position, e.g. The observations have different variances when they are obtained by different means, say using odometry for q̂1 and by using the location of a known feature for q̂2. We can now define the weighted mean-square error

\[S=\sum_{i=1}^{n}\frac{1 }{\sigma_{i}}(q-q̂_{i})^{2}\]

that is, S is the sum of the errors of each observation q̂i weighted by its standard deviation σi . Each error is weighted with its standard deviation to put more emphasis on observations whose standard deviation is low. Minimizing S for n = 2 yields the following optimal expression for q:

\[q=\frac{q̂_{1}\sigma _{2}^{2}}{\sigma _{1}^{2}+\sigma _{2}^{2}}+\frac{q̂_{2}\sigma _{1}^{2}}{\sigma _{1}^{2}+\sigma _{2}^{2}}\]

or, equivalently,

\[q=q̂_{1}+\frac{\sigma _{1}^{2}}{\sigma _{1}^{2}+\sigma _{2}^{2}}(q̂_{2}-q̂_{1})\]

We have now derived an expression for fusing two observations with different errors that provably minimizes the error between our estimate and the real value. As q is a linear combination of two random variables (Section C.4, the new variance is given by

\[\sigma ^{2}=\frac{1}{\frac{1}{\sigma _{1}^{2}}+\frac{1}{\sigma _{2}^{2}}}\]

Interestingly, the resulting variance is smaller than both σ1 and σ2, that is, adding additional observation always helps reducing accuracy instead of introducing more uncertainty.

9.4.3. Integrating Prediction and Update: The Kalman Filter

Although we have introduced the problem above as fusing two observations of the same quantity and weighting them by their variance, we can also interpret the equation above as an update step that calculates a new estimate of an observation based on its old estimate and a measurement. Remember step (4) from above: q̂2 − q̂1 is nothing else then the difference between what the robot actually sees and what it thinks it should see. This term is known as innovation in Kalman lingo. We can now rewrite (9.4.3) from above into

\[x̂_{k+1}=x̂_{k}+K_{k+1}y_{k+1}\]

Here, x̂k is the state we are interested in at time k, Kk+1 = σ21 /(σ21+σ22) the Kalman gain, and yk+1 = q̂2 − q̂1 the innovation. Unfortunately, there are few systems that allow us to directly measure the information we are interested in. Rather, we obtain a sensor measurement zk that we need to convert into our state somehow. You can think about this the other way too and predict your measurement zk from your state xk. This is done using the observation model Hk, so that

\[y_{k}=z_{k}-H_{k}x_{k}\]

In our example Hk was just the identity matrix; in a robot position estimation problem Hk is a function that would predict how a robot would see a certain feature. As you can see, all the weighting based on variances is done in the Kalman gain K. The perception update step shown above, also known as prediction step is only half of what the Kalman filter does. The first step is the update step, which corresponds to the action update we already know. In fact, the variance update in the Kalman filter is exactly the same as we learned during error propagation. Before going into any more details on the Kalman filter, it is time for a brief disclaimer: the Kalman filter only works for linear systems. Forward kinematics of even the simplest robots are mostly non-linear, and so are observation models that relate sensor observations and the robot position. Non-linear systems can be dealt with the Extended Kalman Filter.