4.2: The Strong Law of Large Numbers and Convergence WP1

- Page ID

- 44615

The concept of a sequence of rv’s converging with probability 1 (WP1) was introduced briefly in Section 1.5.6. We discuss this type of convergence more fully here and establish some conditions under which it holds. Next the strong law of large numbers (SLLN) is stated for IID rv’s (this is essentially the result that the partial sample averages of IID rv’s converge to the mean WP1). A proof is given under the added condition that the rv’s have a finite fourth moment. Finally, in the following section, we state the strong law for renewal processes and use the SLLN for IID rv’s to prove it.

Convergence with probability 1 (WP1)

Recall that a sequence \(\left\{Z_{n} ; n \geq 1\right\}\) of rv’s on a sample space \(\Omega\) is defined to converge WP1 to a rv \(Z\) on \(\Omega\) if

\(\operatorname{Pr}\left\{\omega \in \Omega: \lim _{n \rightarrow \infty} Z_{n}(\omega)=Z(\omega)\right\}=1,\)

i.e., if the set of sample sequences \(\left\{Z_{n}(\omega) ; n \geq 1\right\}\) that converge to \(Z(\omega)\) has probability 1. This becomes slightly easier to understand if we define \(Y_{n}=Z_{n}-Z\) for each \(n\). The sequence \(\left\{Y_{n} ; n \geq 1\right\}\) then converges to 0 WP1 if and only if the sequence \(\left\{Z_{n} ; n \geq 1\right\}\) converges to \(Z\) WP1. Dealing only with convergence to 0 rather than to an arbitrary rv doesn’t cut any steps from the following proofs, but it simplifies the notation and the concepts.

We start with a simple lemma that provides a useful condition under which convergence to 0 WP1 occurs. We shall see later how to use this lemma in an indirect way to prove the SLLN.

Let \(\left\{Y_{n} ; n \geq 1\right\}\) be a sequence of rv’s, each with finite expectation. If \(\sum_{n=1}^{\infty} \mathrm{E}\left[\left|Y_{n}\right|\right]<\infty\), then \(\operatorname{Pr}\left\{\omega: \lim _{n \rightarrow \infty} Y_{n}(\omega)=0\right\}=1\).

- Proof

-

For any \(\alpha\), \(0<\alpha<\infty\) and any integer \(m \geq 1\), the Markov inequality says that

\[\operatorname{Pr}\left\{\sum_{n=1}^{m}\left|Y_{n}\right|>\alpha\right\} \leq \frac{\mathrm{E}\left[\sum_{n=1}^{m}\left|Y_{n}\right|\right]}{\alpha}=\frac{\sum_{n=1}^{m} \mathrm{E}\left[\left|Y_{n}\right|\right]}{\alpha}\label{4.3} \]

Since \(\left|Y_{n}\right|\) is non-negative, \(\sum_{n=1}^{m}\left|Y_{n}\right|>\alpha\) implies that \(\sum_{n=1}^{m+1}\left|Y_{n}\right|>\alpha\). Thus the left side of \ref{4.3} is nondecreasing in \(m\) and we can go to the limit

\(\lim _{m \rightarrow \infty} \operatorname{Pr}\left\{\sum_{n=1}^{m}\left|Y_{n}\right|>\alpha\right\} \leq \frac{\sum_{n=1}^{\infty} \mathrm{E}\left[\left|Y_{n}\right|\right]}{\alpha}.\)

Now let \(A_{m}=\left\{\omega: \sum_{n=1}^{m}\left|Y_{n}(\omega)\right|>\alpha\right\}\). As seen above, the sequence \(\left\{A_{m} ; m \geq 1\right\}\) is nested, \(A_{1} \subseteq A_{2} \cdots\), so from property \ref{1.9} of the axioms of probability,4

\[\begin{aligned}

\lim _{m \rightarrow \infty} \operatorname{Pr}\left\{\sum_{n=1}^{m}\left|Y_{n}\right|>\alpha\right\} &=\operatorname{Pr}\left\{\bigcup_{m=1}^{\infty} A_{m}\right\} \\

&=\operatorname{Pr}\left\{\omega: \sum_{n=1}^{\infty}\left|Y_{n}(\omega)\right|>\alpha\right\},

\end{aligned}\label{4.4} \]where we have used the fact that for any given \(\omega\), \(\sum_{n=1}^{\infty}\left|Y_{n}(\omega)\right|>\alpha\) if and only if \(\sum_{n=1}^{m}\left|Y_{n}(\omega)\right|>\alpha\) for some \(m \geq 1\). Combining \ref{4.3} with (4.4),

\(\operatorname{Pr}\left\{\omega: \sum_{n=1}^{\infty}\left|Y_{n}(\omega)\right|>\alpha\right\} \leq \frac{\sum_{n=1}^{\infty} \mathrm{E}\left[\left|Y_{n}\right|\right]}{\alpha}\).

Looking at the complementary set and assuming \(\alpha>\sum_{n=1}^{\infty} \mathrm{E}\left[\left|Y_{n}\right|\right]\),

\[\operatorname{Pr}\left\{\omega: \sum_{n=1}^{\infty}\left|Y_{n}(\omega)\right| \leq \alpha\right\} \geq 1-\frac{\sum_{n=1}^{\infty} \mathrm{E}\left[\left|Y_{n}\right|\right]}{\alpha}\label{4.5} \]

For any ω such that \(\sum_{n=1}^{\infty}\left|Y_{n}(\omega)\right| \leq \alpha\), we see that \(\left\{\left|Y_{n}(\omega)\right| ; n \geq 1\right\}\) is simply a sequence n=1 of non-negative numbers with a finite sum. Thus the individual numbers in that sequence must approach 0, i.e., \(\lim _{n \rightarrow \infty}\left|Y_{n}(\omega)\right|=0\) for each such ω. It follows then that

\(\operatorname{Pr}\left\{\omega: \lim _{n \rightarrow \infty}\left|Y_{n}(\omega)\right|=0\right\} \geq \operatorname{Pr}\left\{\omega: \sum_{n=1}^{\infty}\left|Y_{n}(\omega)\right| \leq \alpha\right\}\).

Combining this with (4.5),

\(\operatorname{Pr}\left\{\omega: \lim _{n \rightarrow \infty}\left|Y_{n}(\omega)\right|=0\right\} \geq 1-\frac{\sum_{n=1}^{\infty} \mathrm{E}\left[\left|Y_{n}\right|\right]}{\alpha}\)

This is true for all \(\alpha\), so \(\operatorname{Pr}\left\{\omega: \lim _{n \rightarrow \infty}\left|Y_{n}\right|=0\right\}=1\), and thus \(\operatorname{Pr}\left\{\omega: \lim _{n \rightarrow \infty} Y_{n}=0\right\}=1\).

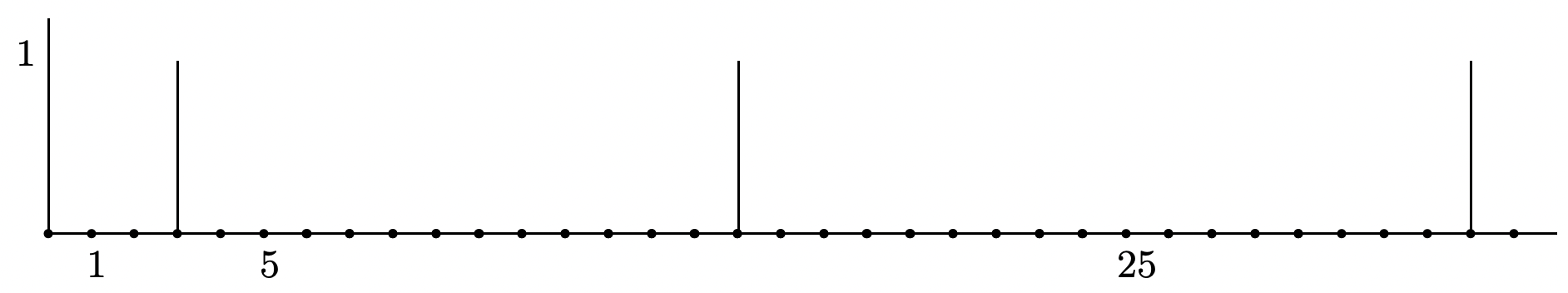

It is instructive to recall Example 1.5.1, illustrated in Figure 4.1, where \(\left\{Y_{n} ; n \geq 1\right\}\) converges in probability but does not converge with probability one. Note that \(\mathrm{E}[Y_n]=1 /\left(5^{j+1}-1\right)\) for \(n \in\left[5^{j}, 5^{j+1}\right)\). Thus \(\lim _{n \rightarrow \infty} \mathrm{E}\left[Y_{n}\right]=0\), but \(\sum_{n=1}^{\infty} \mathrm{E}\left[Y_{n}\right]=\infty\). Thus this sequence does not satisfy the conditions of the lemma. This helps explain how the conditions in the lemma exclude such sequences.

Before proceeding to the SLLN, we want to show that convergence WP1 implies convergence in probability. We give an incomplete argument here with precise versions both in Exercise 4.5 and Exercise 4.6. Exercise 4.6 has the added merit of expressing the set \(\{\omega: \lim _{n} Y_{n}(\omega)=0\}\) explicitly in terms of countable unions and intersections of simple events involving finite sets of the \(Y_{n}\). This representation is valid whether or not the conditions of the lemma are satisfied and shows that this set is indeed an event.

Figure 4.1: Illustration of a sample path of a sequence of rv’s \(\left\{Y_{n} ; n \geq 0\right\}\) where, for each \(j \geq 0\), \(Y_{n}=1\) for an equiprobable choice of \(n \in\left[5^{j}, 5^{j+1}\right)\) and \(Y_{n}=0\) otherwise. Assume that \(\left\{Y_{n} ; n \geq 1\right\}\) is a sequence of rv’s such that \(\lim _{n \rightarrow \infty}\left(Y_{n}\right)=0\) WP1. Then for any \(\epsilon>0\), each sample sequence \(\left\{Y_{n}(\omega) ; n \geq 1\right\}\) that converges to 0 satisfies \(\left|Y_{n}\right| \leq \epsilon\) for all sufficiently large \(n\). This means (see Exercise 4.5) that \(\lim _{n \rightarrow \infty} \operatorname{Pr}\left\{\left|Y_{n}\right| \leq \epsilon\right\}=1\). Since this is true for all \(\epsilon>0\), \(\left\{Y_{n} ; n \geq 0\right\}\) converges in probability to 0.

Strong law of large numbers (SLLN)

We next develop the strong law of large numbers. We do not have the mathematical tools to prove the theorem in its full generality, but will give a fairly insightful proof under the additional assumption that the rv under discussion has a finite 4th moment. The theorem has a remarkably simple and elementary form, considering that it is certainly one of the most important theorems in probability theory. Most of the hard work in understanding the theorem comes from understanding what convergence WP1 means, and that has already been discussed. Given this understanding, the theorem is relatively easy to understand and surprisingly easy to prove (assuming a 4th moment).

For each integer \(n \geq 1\), let \(S_{n}=X_{1}+\cdots+X_{n}\), where \(X_{1}, X_{2}, \ldots\) are IID rv’s satisfying \(E[|X|]<\infty\). Then

\[\operatorname{Pr}\left\{\omega: \lim _{n \rightarrow \infty} \frac{S_{n}(\omega)}{n}=\bar{X}\right\}=1.\label{4.6} \]

- Proof (for the case where \(\bar{X}=0\) and \(\left.\mathrm{E}\left[X^{4}\right]<\infty\right)\)):

-

Assume that \(\bar{X}=0\) and \(\mathrm{E}\left[X^{4}\right]<\infty\). Denote \(\mathrm{E}\left[X^{4}\right]\) by \(\gamma\). For any real number \(x\), if \(|x| \leq 1\), then \(x^{2} \leq 1\), and if \(|x|>1\), then \(x^{2}<x^{4}\). Thus \(x^{2} \leq 1+x^{4}\) for all \(x\). It follows \(\sigma^{2}=\mathrm{E}\left[X^{2}\right] \leq 1+\mathrm{E}\left[X^{4}\right]\). Thus \(\sigma^{2}\) is finite if \(\mathrm{E}\left[X^{4}\right]\) is.

Now let \(S_{n}=X_{1}+\cdots+X_{n}\) where \(X_{1}, \ldots, X_{n}\) are IID with the distribution of \(X\).

\(\begin{aligned}

\mathrm{E}\left[S_{n}^{4}\right] &=\mathrm{E}\left[\left(X_{1}+\cdots+X_{n}\right)\left(X_{1}+\cdots+X_{n}\right)\left(X_{1}+\cdots+X_{n}\right)\left(X_{1}+\cdots+X_{n}\right)\right] \\

&=\mathrm{E}\left[\left(\sum_{i=1}^{n} X_{i}\right)\left(\sum_{j=1}^{n} X_{j}\right)\left(\sum_{k=1}^{n} X_{k}\right)\left(\sum_{\ell=1}^{n} X_{\ell}\right)\right] \\

&=\sum_{i=1}^{n} \sum_{j=1}^{n} \sum_{k=1}^{n} \sum_{\ell=1}^{n} \mathrm{E}\left[X_{i} X_{j} X_{k} X_{\ell}\right]

\end{aligned}\),where we have multiplied out the product of sums to get a sum of \(n^{4}\) terms.

For each \(i\), \(1 \leq i \leq n\), there is a term in this sum with \(i=j=k=\ell\). For each such term, \(\mathrm{E}\left[X_{i} X_{j} X_{k} X_{\ell}\right]=\mathrm{E}\left[X^{4}\right]=\gamma\). There are \(n\) such terms (one for each choice of \(i\), \(1 \leq i \leq n\)) and they collectively contribute \(n \gamma\) to the sum \(\mathrm{E}\left[S_{n}^{4}\right]\). Also, for each \(i\), \(k \neq i\), there is a term with \(j=i\) and \(\ell=k\). For each of these \(n(n-1)\) terms, \(\mathrm{E}\left[X_{i} X_{i} X_{k} X_{k}\right]=\sigma^{4}\). There are another \(n(n-1)\) terms with \(j \neq i\) and \(k=i\), \(\ell=j\). Each such term contributes \(\sigma^{4}\) to the sum. Finally, for each \(i \neq j\), there is a term with \(\ell=i\) and \(k=j\). Collectively all of these terms contribute \(3 n(n-1) \sigma^{4}\) to the sum. Each of the remaining terms is 0 since at least one of \(i, j, k, \ell\) is different from all the others, Thus we have

\(\mathrm{E}\left[S_{n}^{4}\right]=n \gamma+3 n(n-1) \sigma^{4}\).

Now consider the sequence of rv’s \(\left\{S_{n}^{4} / n^{4} ; n \geq 1\right\}\).

\(\sum_{n=1}^{\infty} \mathrm{E}\left[\left|\frac{S_{n}^{4}}{n^{4}}\right|\right]=\sum_{n=1}^{\infty} \frac{n \gamma+3 n(n-1) \sigma^{4}}{n^{4}}<\infty\),

where we have used the facts that the series \(\sum_{n \geq 1} 1 / n^{2}\) and the series \(\sum_{n \geq 1} 1 / n^{3}\) converge.

Using Lemma 4.2.1 applied to \(\left\{S_{n}^{4} / n^{4} ; n \geq 1\right\}\), we see that \(\lim _{n \rightarrow \infty} S_{n}^{4} / n^{4}=0\) WP1. For each ω such that \(\lim _{n \rightarrow \infty} S_{n}^{4}(\omega) / n^{4}=0\), the nonnegative fourth root of that sequence of n nonnegative numbers also approaches 0. Thus \(\lim _{n \rightarrow \infty}\left|S_{n} / n\right|=0\) WP1.

The above proof assumed that \(\mathrm{E}[X]=0\). It can be extended trivially to the case of an arbitrary finite \(\bar{X}\) by replacing \(X\) in the proof with \(X-\bar{X}\). A proof using the weaker condition that \(\sigma_{X}^{2}<\infty\) will be given in Section 7.9.1.

The technique that was used at the end of this proof provides a clue about why the concept of convergence WP1 is so powerful. The technique showed that if one sequence of rv’s \(\left(\left\{S_{n}^{4} / n^{4} ; n \geq 1\right\}\right)\) converges to 0 WP1, then another sequence \(\left.\left(\left|S_{n} / n\right| ; n \geq 1\right\}\right)\) also converges WP1. We will formalize and generalize this technique in Lemma 4.3.2 as a major step toward establishing the strong law for renewal processes.

4This proof probably appears to be somewhat nitpicking about limits. The reason for this is that the argument is quite abstract and it is difficult to develop the kind of intuition that ordinarily allows us to be somewhat more casual.